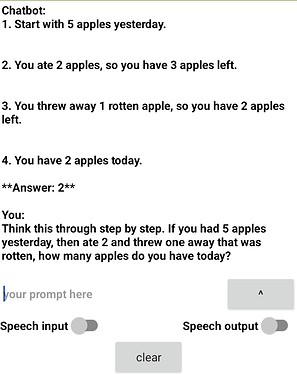

This project uses Google's 'gemini-pro' LLM to build a simple chatbot. It is the same LLM that Google Bard has used since December 2023, and it is comparable to ChatGPT 3.5.

You can request an API key from Google AI Studio at https://makersuite.google.com/app/apikey

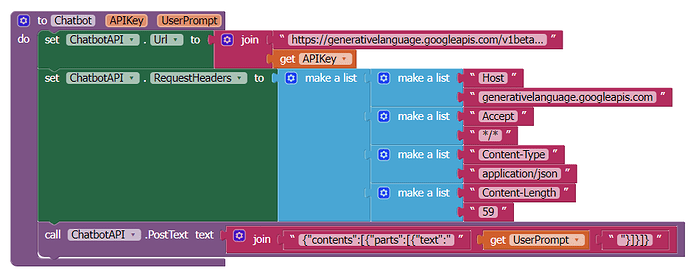

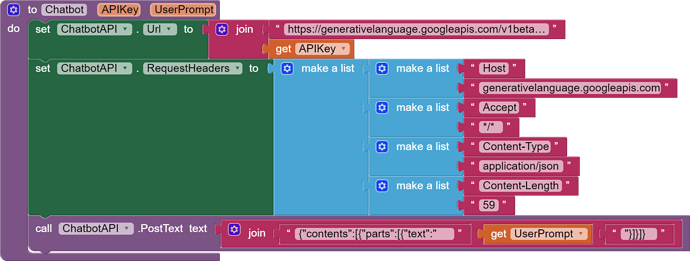

The app exchanges data with the API (generativelanguage.googleapis.com) using HTTP POST requests. The 'Chatbot' procedure in this app shows how that works.

The header information goes in the 'RequestHeaders' block, and the message body, which carries the prompt to the API, goes in the 'PostText' block. I was able to determine the POST headers by extracting them from a curl API example for this service:

curl --trace-ascii curltorawpost2.txt -H 'Content-Type: application/json' -d '{"contents":[{"parts":[{"text":"What is a Yoda quote?"}]}]}' -X POST https://generativelanguage.googleapis.com/v1beta/models/gemini-pro:generateContent?key=(your api key here)

The '--trace-ascii curltorawpost2.txt' option writes the raw POST data that curl sends to the host service into the text file curltorawpost2.txt.

The relevant parts of that output are as follows:

0000: POST /v1beta/models/gemini-pro:generateContent?key=AIzaSyDFbcSc4

0040: D2-mNg2Z0CSumchsSHXt3IK02Q HTTP/1.1

0065: Host: generativelanguage.googleapis.com

008e: User-Agent: curl/8.4.0

00a6: Accept: */*

00b3: Content-Type: application/json

00d3: Content-Length: 59

00e7:

=> Send data, 59 bytes (0x3b)

0000: {"contents":[{"parts":[{"text":"What is a Yoda quote?"}]}]}

In the app, the App Inventor Web component's 'Url' block is set to 'https://generativelanguage.googleapis.com/v1beta/models/gemini-pro:generateContent?key=(your api key)' and the 'RequestHeaders' block contains the Host, Accept, Content-Type, and Content-Length information.

The 'PostText' block contains the message body, including the prompt to the LLM. In this case the prompt is "What is a Yoda quote?", which would be stored in the 'UserPrompt' variable.
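For anyone who wants to see the same request outside of the blocks, here is a rough Python sketch of it. It is not part of the app. The names `build_request`, `send`, and `API_URL` are made up for illustration, and "YOUR_API_KEY" is a placeholder. It builds the URL, headers, and body described above, and checks that the body comes out at the 59 bytes shown in the curl trace.

```python
import json
import urllib.parse
import urllib.request

# Endpoint taken from the curl example; the key is appended as a query parameter.
API_URL = "https://generativelanguage.googleapis.com/v1beta/models/gemini-pro:generateContent"


def build_request(api_key, user_prompt):
    """Build (but do not send) a POST request like the one in the curl trace."""
    # Compact separators reproduce the exact body from the curl example.
    body = json.dumps(
        {"contents": [{"parts": [{"text": user_prompt}]}]},
        separators=(",", ":"),
    ).encode("utf-8")
    url = API_URL + "?" + urllib.parse.urlencode({"key": api_key})
    headers = {"Content-Type": "application/json", "Accept": "*/*"}
    # urllib fills in Host and Content-Length itself when the request is sent.
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def send(request):
    """Send the request and return the raw JSON reply as text.

    Needs network access and a valid API key, so it is not called here.
    """
    with urllib.request.urlopen(request) as response:
        return response.read().decode("utf-8")


req = build_request("YOUR_API_KEY", "What is a Yoda quote?")
print(len(req.data))  # 59, matching Content-Length in the trace
```

The body length is worth checking because Content-Length must match the bytes actually sent; a longer prompt gives a different number.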

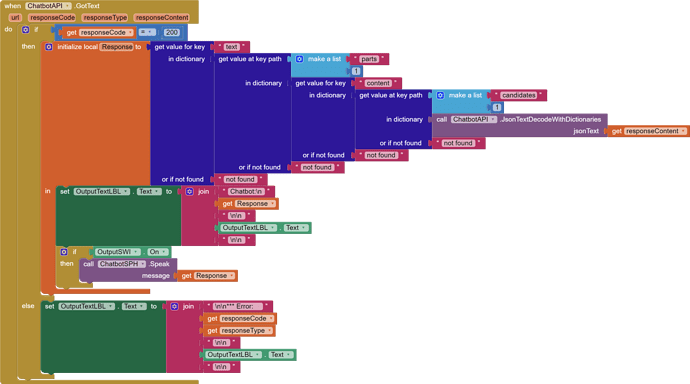

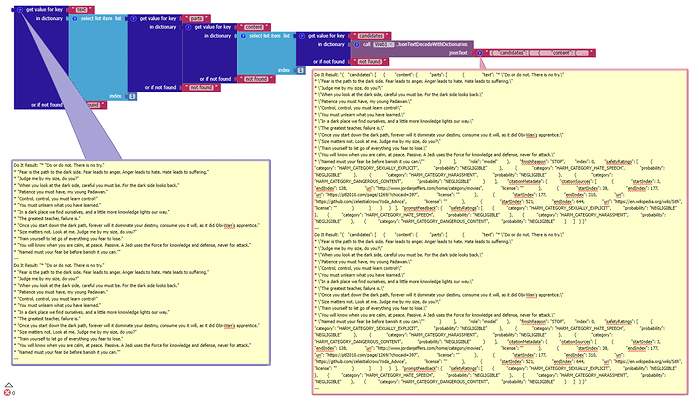

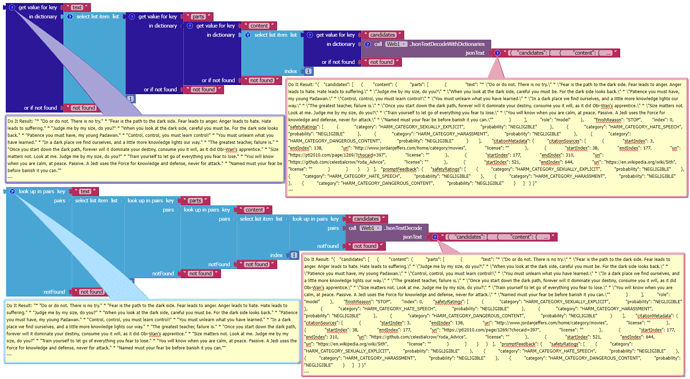

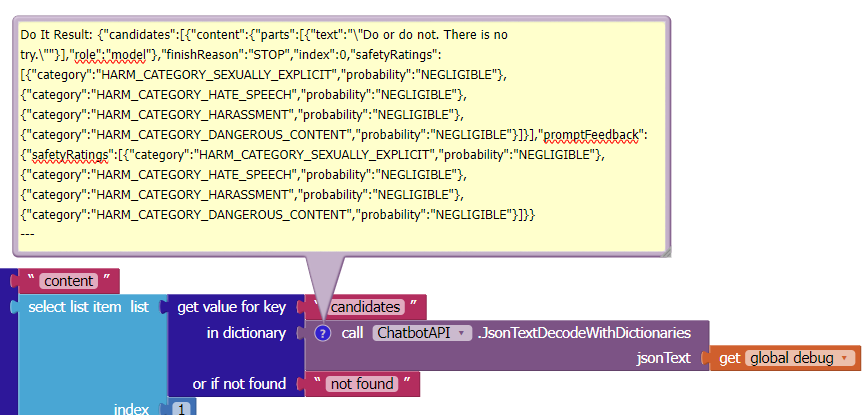

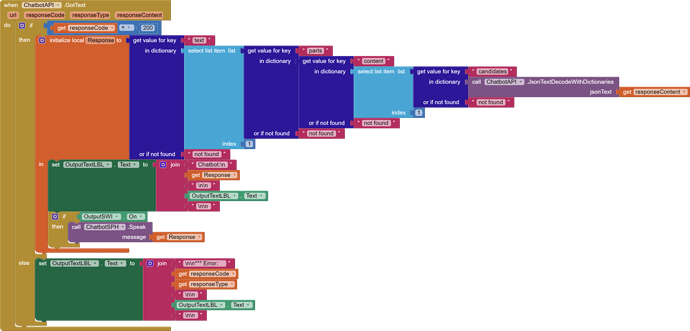

The 'ChatbotAPI.GotText' event block receives the reply embedded in a JSON string. The reply is then extracted and displayed on the device screen, and optionally spoken using the text-to-speech component. I have not learned how to use the Dictionary blocks yet. Some of you could no doubt simplify access to the embedded response, which is stored under a "text" key.
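As a rough illustration of that extraction step, here is a Python sketch that walks the reply by key lookup. It assumes the reply nests the text under candidates, content, parts, text; the sample string below is a made-up placeholder in that shape, not real API output, and `extract_reply` is a hypothetical helper name.

```python
import json

# Illustrative reply only: the nesting is an assumption, the text is a placeholder.
SAMPLE_REPLY = (
    '{"candidates":[{"content":{"parts":[{"text":"(model reply here)"}],'
    '"role":"model"}}]}'
)


def extract_reply(raw_json):
    """Return the reply text from the JSON string, or "" if it is missing."""
    data = json.loads(raw_json)
    try:
        # Walk the nested structure down to the list of parts.
        parts = data["candidates"][0]["content"]["parts"]
    except (KeyError, IndexError, TypeError):
        # An error reply (for example a bad key) has no candidates.
        return ""
    # Join the "text" value of every part into one string.
    return "".join(part.get("text", "") for part in parts)


print(extract_reply(SAMPLE_REPLY))
```

Looking values up by key like this is less fragile than picking list items by position, since it does not depend on the order of the fields in the reply.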

For fun I added speech input as well, so the app user and chatbot can have a voice conversation. The user must press the record button each time they speak, however, sort of like a walkie-talkie.

Here is version 2 of the project, modified 12/20/23 at 7:55 pm CST. Changes: the select list item blocks were replaced with Dictionary blocks for the response content:

Gemini_ProV2.aia (6.6 KB)
