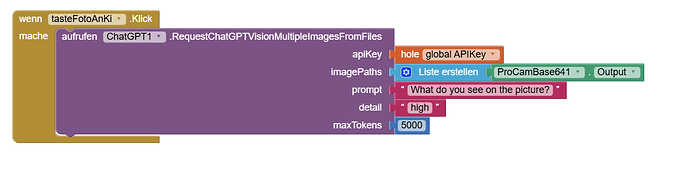

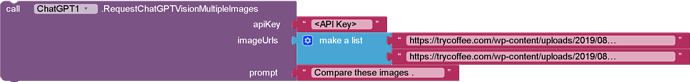

Hi @Black_Knight, I have just tried to use GPT Vision through your extension with this code:

and I am getting the error message:

{ "error": { "message": "The model gpt-4-vision-preview has been deprecated, learn more here: https://platform.openai.com/docs/deprecations", "type": "invalid_request_error", "param": null, "code": "model_not_found" }}

Could you please switch the default model for Vision functions to a valid one or provide a parameter for model selection?
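For anyone hitting the same response, here is a minimal sketch of how this error body could be recognised in code. The JSON is copied from the message above; the helper name `is_model_not_found` is only an illustration and is not part of the extension.

```python
import json

# Error body copied from the post above.
raw = (
    '{ "error": { "message": "The model gpt-4-vision-preview has been deprecated, '
    'learn more here: https://platform.openai.com/docs/deprecations", '
    '"type": "invalid_request_error", "param": null, "code": "model_not_found" }}'
)


def is_model_not_found(body: str) -> bool:
    """Return True when the error body reports a missing or retired model."""
    error = json.loads(body).get("error") or {}
    return error.get("code") == "model_not_found"


print(is_model_not_found(raw))
```

A check like this only tells you that the model name has to change; it does not pick a replacement model.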

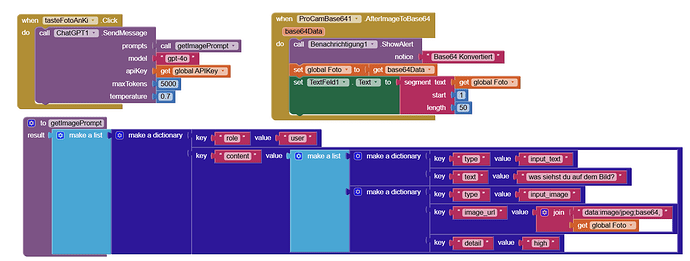

I have also tried to pass the images to gpt-4o this way:

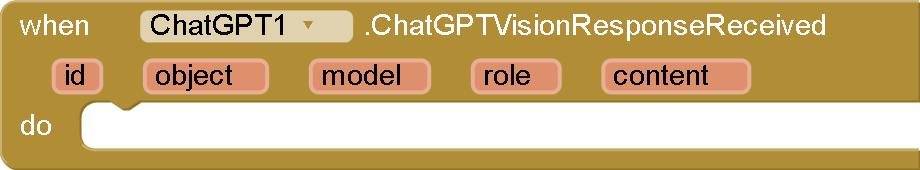

but I am getting the error message: "No value for id" in this case.

Could you please provide a way to use GPT Vision again? It was the reason I bought your extension.

The first issue occurs because the model was deprecated.

I have updated the .aix and will send you the extension by PM, but first please send the payment transaction ID for the extension.

As for the second issue,

here is how to use the block:

I will contact you by PM about the fix.

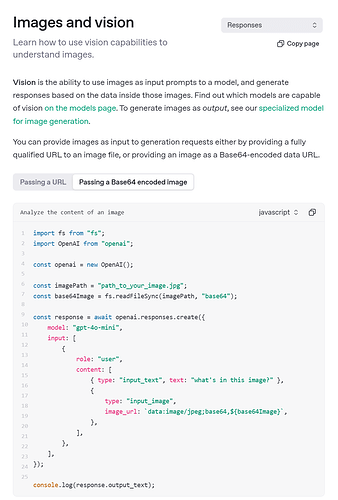

My idea with the second solution was to simply follow the structure from the OpenAI Documentation:

https://platform.openai.com/docs/guides/images?api-mode=responses&lang=javascript&format=base64-encoded

This could have worked if the extension used serialization to map any structure to JSON. Just an idea I wanted to share!
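To make the idea concrete, here is a rough sketch of the base64 structure from the linked OpenAI guide, built as a plain dictionary and serialized to JSON. It only builds the request body and sends nothing. The function name, the `gpt-4o` default and the JPEG data URL are my assumptions; how the extension builds its requests internally is not shown in this thread.

```python
import base64
import json


def build_responses_payload(image_bytes: bytes, prompt: str, model: str = "gpt-4o") -> str:
    """Serialize a Responses API style request with one base64-encoded image."""
    b64 = base64.b64encode(image_bytes).decode("ascii")
    payload = {
        "model": model,
        "input": [
            {
                "role": "user",
                "content": [
                    {"type": "input_text", "text": prompt},
                    # Assumes the bytes are a JPEG; adjust the MIME type otherwise.
                    {"type": "input_image", "image_url": f"data:image/jpeg;base64,{b64}"},
                ],
            }
        ],
    }
    return json.dumps(payload)


# The three bytes below are just the JPEG magic number, not a real picture.
body = build_responses_payload(b"\xff\xd8\xff", "What is in this image?")
print(body)
```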

The vision blocks already existed; I didn't remove them.

ChatGPT extension updated by adding new models

You can now use these models:

gpt-4.1

gpt-4.1-mini

gpt-4.1-nano

o3

o4-mini

This post and audio will clarify the difference between "ChatGPT Assistant Extension" and "ChatGPT extension".

Hello. I have now paid and tried the app. It is very difficult to enter the LONG API key on the first page! Is it possible to store the key directly in the blocks of the app?

Hello @sparta,

Thank you for your payment, I really appreciate it!

I think you purchased this one:

It is not the ChatGPT extension; they are different.

But could you please clarify your issue a bit more? I'm not entirely sure what you mean.

If you're looking for the ChatGPT API key, you can generate one from the following link:

https://platform.openai.com/api-keys

I hope this ChatGPT extension helps take your apps to the next level!

You can create a variable called API key and use it inside all method blocks.

In the next update I will add setter and getter functions so that the API key is set a single time, with no need to set it each time you use a method block!
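As a rough illustration of the set-once idea, here is a small sketch in Python. The class and method names are hypothetical and do not come from the extension; stripping whitespace is my own addition, since stray spaces or line breaks are a common cause of paste errors with long keys.

```python
class ApiKeyStore:
    """Hypothetical holder: set the key once, read it from every request."""

    def __init__(self) -> None:
        self._key = ""

    def set_api_key(self, key: str) -> None:
        # Drop spaces and line breaks that can sneak in when pasting a long key.
        self._key = "".join(key.split())

    def get_api_key(self) -> str:
        if not self._key:
            raise ValueError("API key has not been set")
        return self._key


store = ApiKeyStore()
store.set_api_key("  sk-example\n")  # placeholder value, not a real key
print(store.get_api_key())
```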

Dear Ahmed, thank you very much for your reply!

Obviously I have an API key, but it is very difficult to copy such a long string into the app without errors...

Do you have a simpler solution?

Kind regards

Santi

In a web browser, copy and paste with Ctrl+C and Ctrl+V.

I don't understand what you mean, so I can't recommend a solution.

Please show it with images!

Hello. I bought your extension to analyze images, but unfortunately the .aix doesn't have this function! Why?

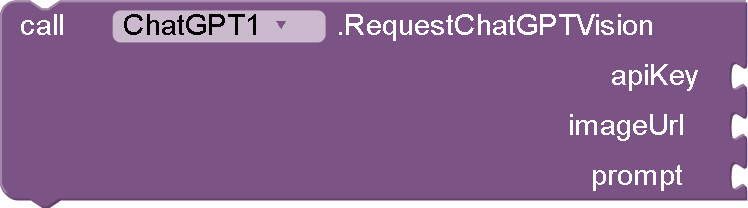

Check this please

Also check these blocks

Function: RequestChatGPTVision(String apiKey, String imageUrl, String prompt)

Purpose: This function sends a request to OpenAI's ChatGPT vision API to analyze an image and provide insights based on the given prompt.

Parameters:

- apiKey: Your OpenAI API key.

- imageUrl: The URL of the image to analyze.

- prompt: A text prompt to guide the analysis (e.g., "What's in this image?").

Function: RequestChatGPTVisionMultipleImages(String apiKey, YailList imageUrls, String prompt)

Purpose: This function sends a request to OpenAI's ChatGPT vision API to analyze multiple images and provide insights based on the given prompt.

Parameters:

- apiKey: Your OpenAI API key.

- imageUrls: A YailList containing the URLs of the images to analyze.

- prompt: A text prompt to guide the analysis (e.g., "Compare these images").
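For reference, a request with several image URLs and one prompt could look roughly like the sketch below in the Chat Completions message format. This is only an illustration of the request shape: the function name, the `gpt-4o` default and the example URLs are assumptions, and the extension's actual internals are not shown in this thread. A single image is simply a list with one URL.

```python
import json
from typing import List


def build_vision_payload(image_urls: List[str], prompt: str, model: str = "gpt-4o") -> dict:
    """Build a Chat Completions style body: one text part, then one part per image."""
    content = [{"type": "text", "text": prompt}]
    for url in image_urls:
        content.append({"type": "image_url", "image_url": {"url": url}})
    return {"model": model, "messages": [{"role": "user", "content": content}]}


payload = build_vision_payload(
    ["https://example.com/a.png", "https://example.com/b.png"],  # placeholder URLs
    "Compare these images",
)
print(json.dumps(payload, indent=2))
```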

RequestChatGPTVisionFromFile

Purpose: Analyzes a single local image file and provides insights based on a text prompt.

Parameters:

- apiKey: Your OpenAI API key.

- imagePath: The file path of the image to analyze.

- prompt: A text prompt to guide the analysis (e.g., "What's in this image?").

- detail: The desired level of detail for the analysis (low, high, or auto).

- maxTokens: The maximum number of tokens allowed in the API response.
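To show where detail and maxTokens would end up, here is a sketch that reads a local file, encodes it as a base64 data URL and builds a Chat Completions style body. The function name, the defaults and the `gpt-4o` model are assumptions for illustration only; the file-based blocks may work differently inside the extension.

```python
import base64
import mimetypes
import os
import tempfile


def build_file_vision_payload(image_path: str, prompt: str, detail: str = "auto",
                              max_tokens: int = 300, model: str = "gpt-4o") -> dict:
    """Build a request body for one local image, sent inline as a data URL."""
    if detail not in ("low", "high", "auto"):
        raise ValueError("detail must be low, high, or auto")
    # Guess the MIME type from the file extension; fall back to JPEG.
    mime = mimetypes.guess_type(image_path)[0] or "image/jpeg"
    with open(image_path, "rb") as handle:
        b64 = base64.b64encode(handle.read()).decode("ascii")
    image_part = {
        "type": "image_url",
        "image_url": {"url": f"data:{mime};base64,{b64}", "detail": detail},
    }
    return {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [
            {"role": "user", "content": [{"type": "text", "text": prompt}, image_part]}
        ],
    }


# Usage with a throwaway file so the sketch runs anywhere.
with tempfile.NamedTemporaryFile(suffix=".png", delete=False) as tmp:
    tmp.write(b"not a real image")
payload = build_file_vision_payload(tmp.name, "What's in this image?", detail="low", max_tokens=100)
os.remove(tmp.name)
print(payload["messages"][0]["content"][1]["image_url"]["detail"])
```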

RequestChatGPTVisionMultipleImagesFromFile

Purpose: Analyzes multiple local image files and provides insights based on a text prompt.

Parameters:

- apiKey: Your OpenAI API key.

- imagePaths: A YailList containing the file paths of the images to analyze.

- prompt: A text prompt to guide the analysis (e.g., "Compare these images").

- detail: The desired level of detail for the analysis (e.g., "high").

- maxTokens: The maximum number of tokens allowed in the API response.

Events:

@sparta also check this section

Sorry, but in my extension we have NO BLOCKS for VISION!

Please check the extension file, not the AIA file;

the AIA file contains an old version.

You can PM me with your payment transaction ID and I will send you the .aix file.