I'm scraping some NOAA data in JSON format using their WMM API. Scraping the data works without issues.

I'd like to grab all the declination data from the JSON and export it to a CSV table. At the moment, I'm walking the "declination" data into a list and then splitting the list with a \n.

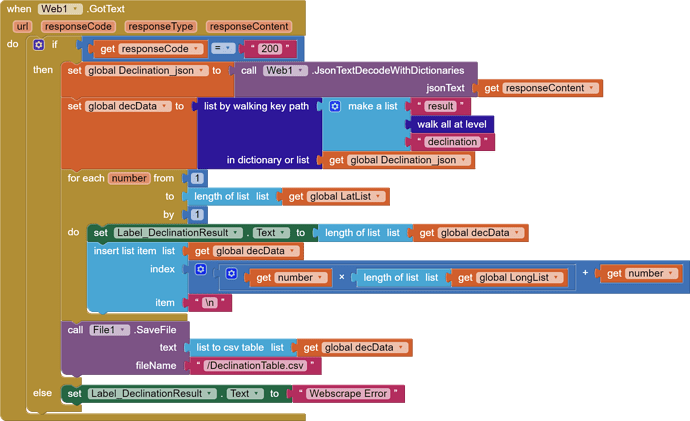

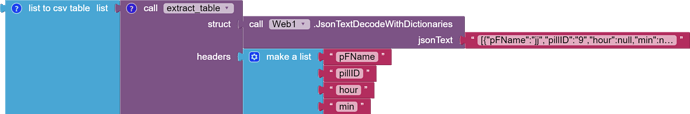

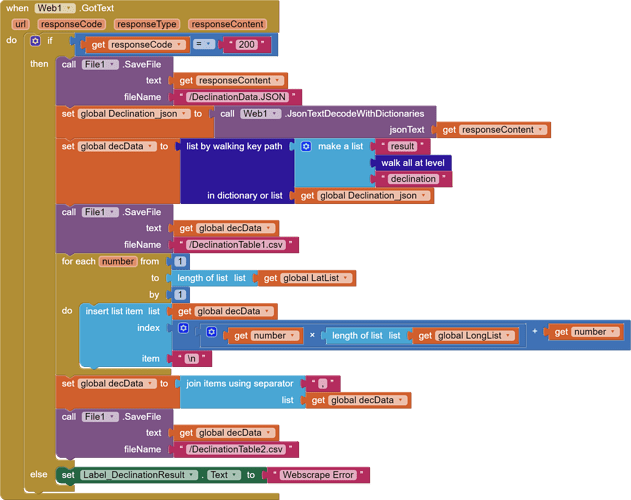

These are the principal blocks:

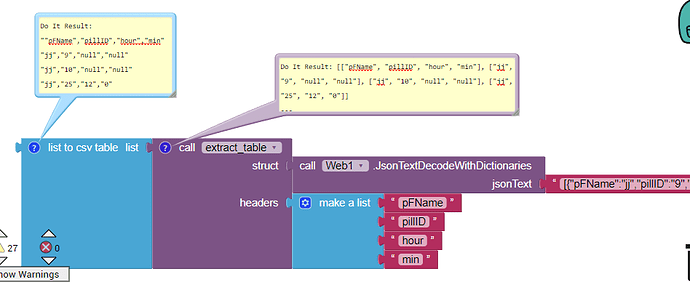

It's "working", but I believe I'm actually creating a list of lists here. When I read the CSV data, each declination value I've scraped is wrapped in "" and therefore ends up in its own row. The \n I'm inserting then just adds a blank row.

When I walk the dictionary, am I doing it the right way and simply need to convert my list of lists back to a single list, or is there a better way to send the JSON "declination" values straight into a single list?
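
To make the two options concrete, here is a minimal sketch, assuming Python and assuming the parsed response is a dict whose `"result"` key holds a list of records, as in the example JSON at the end of this post. The names `raw`, `data`, `flatten`, and `list_of_lists` are hypothetical and only for illustration; the sample records are trimmed to three fields.

```python
import json

# Hypothetical, trimmed sample shaped like the example JSON in this post.
raw = """{"model": "WMM-2020", "result": [
  {"declination": -21.33346, "latitude": 85, "longitude": -180},
  {"declination": -19.87348, "latitude": 85, "longitude": -175},
  {"declination": -18.69972, "latitude": 85, "longitude": -170}
]}"""

data = json.loads(raw)

# Option 1: pull the values straight into a single flat list.
declinations = [entry["declination"] for entry in data["result"]]


def flatten(nested):
    """Turn a list of lists into one flat list (one level deep)."""
    return [value for inner in nested for value in inner]


# Option 2: if a list of lists already exists, flatten it afterwards.
list_of_lists = [[value] for value in declinations]
flat_again = flatten(list_of_lists)
```

Both routes should end with the same flat list; the first avoids building the nested list at all.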

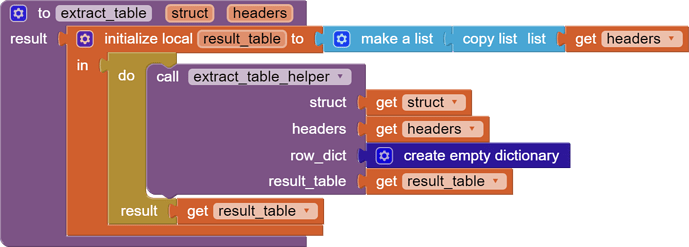

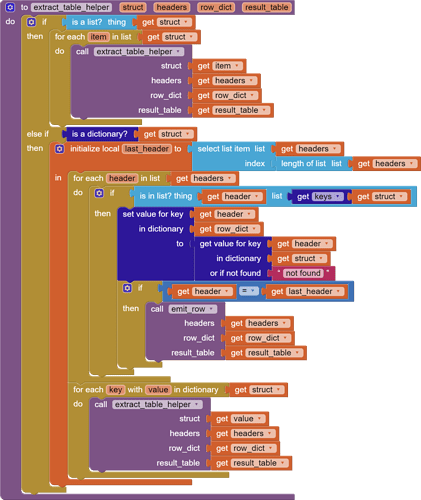

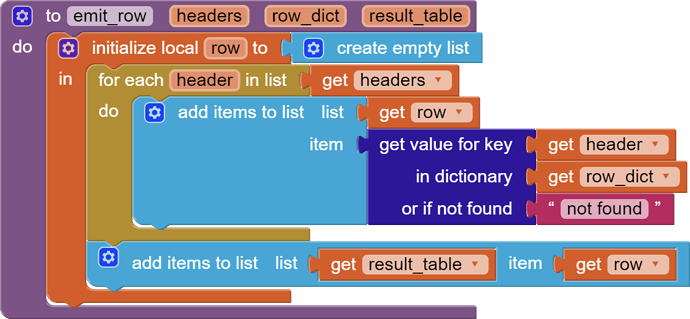

Bonus points for any hints on simplifying the process of getting the JSON scrape directly into the CSV.

Here is an example of the JSON for reference:

    declination{
      "model":"WMM-2020",
      "version":"0.5.1.11",
      "result":[ {
          "date": 2023.7479,
          "elevation": 0,
          "declination": -21.33346,
          "latitude": 85,
          "declination_sv": -2.7678,
          "warning": "Warning: location is in the blackout zone around the magnetic pole as defined by the WMM military specification (https://www.ngdc.noaa.gov/geomag/WMM/data/MIL-PRF-89500B.pdf). Compass accuracy is highly degraded in this region.",
          "declination_uncertainty": 5.03157,
          "longitude": -180
        },
        {
          "date": 2023.7479,
          "elevation": 0,
          "declination": -19.87348,
          "latitude": 85,
          "declination_sv": -2.58591,
          "warning": "Warning: location is in the blackout zone around the magnetic pole as defined by the WMM military specification (https://www.ngdc.noaa.gov/geomag/WMM/data/MIL-PRF-89500B.pdf). Compass accuracy is highly degraded in this region.",
          "declination_uncertainty": 4.9491,
          "longitude": -175
        },
        {
          "date": 2023.7479,
          "elevation": 0,
          "declination": -18.69972,
          "latitude": 85,
          "declination_sv": -2.40869,
          "warning": "Warning: location is in the blackout zone around the magnetic pole as defined by the WMM military specification (https://www.ngdc.noaa.gov/geomag/WMM/data/MIL-PRF-89500B.pdf). Compass accuracy is highly degraded in this region.",
          "declination_uncertainty": 4.93419,
          "longitude": -170
        },
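
For the "straight into CSV" part, one possible sketch, assuming Python's standard `csv` module and records shaped like the example above. The function name `results_to_csv` and the `FIELDS` column selection are hypothetical; the records are copied from the example with the long `warning` text left out.

```python
import csv
import io

# Hypothetical choice of columns to keep; other keys are ignored.
FIELDS = ["latitude", "longitude", "declination"]


def results_to_csv(results, fields=FIELDS):
    """Write one CSV row per record and return the CSV as a string."""
    buffer = io.StringIO(newline="")
    writer = csv.DictWriter(buffer, fieldnames=fields, extrasaction="ignore")
    writer.writeheader()
    # Each dict becomes exactly one row, so no manual "\n" is needed.
    writer.writerows(results)
    return buffer.getvalue()


# Records copied from the example JSON (warning field omitted).
results = [
    {"date": 2023.7479, "elevation": 0, "declination": -21.33346,
     "latitude": 85, "declination_sv": -2.7678, "longitude": -180},
    {"date": 2023.7479, "elevation": 0, "declination": -19.87348,
     "latitude": 85, "declination_sv": -2.58591, "longitude": -175},
    {"date": 2023.7479, "elevation": 0, "declination": -18.69972,
     "latitude": 85, "declination_sv": -2.40869, "longitude": -170},
]

csv_text = results_to_csv(results)
```

To write to disk instead of a string, the same writer can be given a file opened with `open(path, "w", newline="")`; the `csv` documentation recommends `newline=""` so that the module controls line endings itself, which avoids stray blank rows on some platforms.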