Thank you so much for your response. However, the problem is in my code (a few answers above). When I use the sequence that shifts the bits by deleting one of them, the program should decode the sequence into normal text, and it's not working. I tried both parts separately and they work, but not together. That's the issue.

Are you doing the kind of decoding that requires an ASCII table?

Exactly! The program constantly tries to receive a binary sequence, and when it receives the header, it starts to decode the message that follows the header with an ASCII table. (I also thought about using some bits to give the size of the message, to determine when to finish decoding and start waiting for a new header again.)

You mentioned that you were using a header of '11111111' (8 1's) to mark the start of the text.

Your binString text block starts with 14 0's before the 8 bit header.

So I am guessing you are watching some bit stream but came in in the middle of a transmission, and don't have access to any byte-delimiting markers that you would get in serial traffic, where the front edge of a byte is stretched out in time?

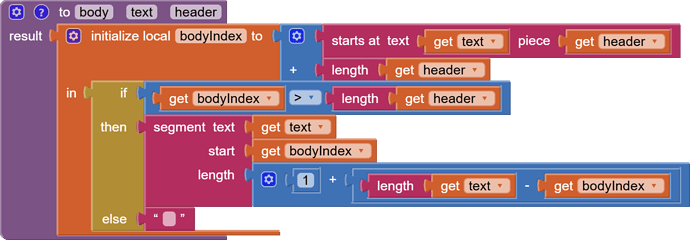

In that case, you could use the text block that searches for the index in a longer text (binString) of a given short piece of text ('11111111'). Once you have that non-zero index, you can use that to clip off the header and the pre-header, leaving only the stuff past the header.

(Same logic as my left shift, but use the index+7 instead of 1.)

Then take the remainder and eightify it, split at spaces, and do the lookups of the list items in your incomplete ascii table, using '?' as a not found value to keep output neat and aligned right.
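For readers following along in Python rather than blocks, the steps above can be sketched roughly as follows. This is only an illustration: the function name `decode_after_header` and the tiny lookup table are made up for the example, and Python's `find` is 0-based, whereas the blocks use a 1-based index.

```python
HEADER = "11111111"  # header value mentioned in the thread


def decode_after_header(bin_string, table, not_found="?"):
    """Return the text after the first header, or None if no header is present."""
    start = bin_string.find(HEADER)          # 0-based position, -1 when absent
    if start == -1:
        return None
    payload = bin_string[start + len(HEADER):]   # clip pre-header and header
    usable = len(payload) - len(payload) % 8     # ignore a trailing partial byte
    chunks = [payload[i:i + 8] for i in range(0, usable, 8)]  # "eightify"
    return "".join(table.get(chunk, not_found) for chunk in chunks)


# Deliberately incomplete table (hypothetical) to show the '?' fallback.
table = {"01001000": "H", "01101001": "i"}
stream = "0" * 14 + HEADER + "01001000" + "01101001" + "00100001"
print(decode_after_header(stream, table))  # Hi?
```

Note that `find` returns the first run of eight 1s, so any 1s immediately before the real header would shift the alignment.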

That's it: I'm trying to obtain bits from light sensors (if it's on, '1'; if it's off, '0') using an arbitrary threshold (I'm using 500, but it could be any number above 300), and after getting them, I'm trying to decode them as text. I've written a Python program that does all of this; maybe it makes things clearer:

__________________________________________________________________________

import time

from gpiozero import LightSensor

# Define the GPIO pin connected to the light sensor
sensor = LightSensor(4)  # Use your actual GPIO pin number here

# Define the binary header we're waiting for
HEADER = "11111111"

# Define the bit reading interval in seconds (e.g., 0.1s = 100ms per bit)
BIT_DURATION = 0.1

# ASCII table: mapping binary strings to ASCII characters
ascii_table = {format(i, '08b'): chr(i) for i in range(256)}  # '00000000' -> '\x00', ..., '01100001' -> 'a', etc.


def read_bit():
    """
    Reads a single bit from the light sensor.
    If light is detected → returns '1' (flash ON)
    If no light → returns '0' (flash OFF)
    """
    # Sample once per bit period. Calling wait_for_light(timeout=...) first
    # would return early when light is present, so '0' bits would take
    # longer than '1' bits and the sampling would drift.
    value = '1' if sensor.light_detected else '0'
    time.sleep(BIT_DURATION)
    return value


def wait_for_header():
    """
    Continuously reads bits from the sensor until the 8-bit header '11111111' is detected.
    """
    buffer = ""
    print("Waiting for header...")
    while True:
        bit = read_bit()
        buffer += bit
        buffer = buffer[-8:]  # Keep only the last 8 bits
        if buffer == HEADER:
            print("[Header detected]")
            return


def read_n_bits(n):
    """
    Reads exactly n bits from the sensor and returns them as a string.
    """
    bits = ""
    for _ in range(n):
        bit = read_bit()
        bits += bit
    return bits


def bits_to_text(bits):
    """
    Converts a binary string to ASCII text using the ascii_table.
    It processes 8 bits at a time.
    """
    text = ""
    for i in range(0, len(bits), 8):
        byte = bits[i:i+8]
        char = ascii_table.get(byte, '?')  # Replace unknown sequences with '?'
        text += char
    return text


def main():
    while True:
        wait_for_header()  # Step 1: Wait for the '11111111' header

        # Step 2: Read the next 8 bits → message length (in bits)
        length_bits = read_n_bits(8)
        message_length = int(length_bits, 2)

        # The length must be a multiple of 8 for full ASCII characters
        if message_length % 8 != 0:
            print("[Invalid message length. Must be multiple of 8 bits.]")
            continue

        print(f"[Message length detected: {message_length} bits, {message_length // 8} characters]")

        # Step 3: Read the message bits
        message_bits = read_n_bits(message_length)

        # Step 4: Decode the message
        message = bits_to_text(message_bits)
        print(f"[Decoded message]: {message}")
        print("Waiting for next message...\n")


if __name__ == "__main__":
    main()

__________________________________________________________________________

def bit_stream():
    """
    Simulates a test bit stream:
    Header: 11111111
    Message length: 10110000 (176 bits = 22 bytes)
    Message: "Hello my name is Mario"
    """
    return iter(
        "11111111" +  # Header
        "10110000" +  # Length = 176 bits = 22 characters
        "01001000" +  # H
        "01100101" +  # e
        "01101100" +  # l
        "01101100" +  # l
        "01101111" +  # o
        "00100000" +  # (space)
        "01101101" +  # m
        "01111001" +  # y
        "00100000" +  # (space)
        "01101110" +  # n
        "01100001" +  # a
        "01101101" +  # m
        "01100101" +  # e
        "00100000" +  # (space)
        "01101001" +  # i
        "01110011" +  # s
        "00100000" +  # (space)
        "01001101" +  # M
        "01100001" +  # a
        "01110010" +  # r
        "01101001" +  # i
        "01101111"    # o
    )

__________________________________________________________________________
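To try the same framing without a Raspberry Pi or a light sensor, one option is to run the decoding logic against an in-memory iterator of '0'/'1' characters. The sketch below is hypothetical (the helper names `frame`, `take`, and `decode_stream` are not part of the program above) and assumes, like `main()`, that the length byte counts payload bits, which limits a message to 255 bits (31 whole characters).

```python
from itertools import islice

HEADER = "11111111"


def frame(text):
    """Build header + 8-bit length (in bits) + payload for a short ASCII text."""
    payload = "".join(format(ord(ch), "08b") for ch in text)
    if len(payload) > 255:
        raise ValueError("payload does not fit in an 8-bit length field")
    return HEADER + format(len(payload), "08b") + payload


def take(bits, n):
    """Pull up to n characters from an iterator of bits."""
    return "".join(islice(bits, n))


def decode_stream(bits):
    """Decode one framed message from an iterable of '0'/'1'; None if incomplete."""
    bits = iter(bits)            # make sure every step consumes the same iterator
    window = ""
    for bit in bits:
        window = (window + bit)[-8:]      # sliding window over the last 8 bits
        if window == HEADER:
            break
    else:
        return None                        # stream ended before a header
    length_bits = take(bits, 8)
    if len(length_bits) < 8:
        return None
    payload = take(bits, int(length_bits, 2))
    usable = len(payload) - len(payload) % 8
    return "".join(chr(int(payload[i:i + 8], 2)) for i in range(0, usable, 8))


print(decode_stream("000" + frame("Hello my name is Mario")))
```

A 22-character message needs a length byte of 10110000 (176 bits) under this convention.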

That should be how the whole program works :).

Thank you so much for all the feedback received.

So what's the question?

I'm answering that statement: "So I am guessing you are watching some bit stream but came in in the middle of a transmission, and don't have access to any byte-delimiting markers that you would get in serial traffic, where the front edge of a byte is stretched out in time?"

This topic was automatically closed 7 days after the last reply. New replies are no longer allowed.

Hi everyone,

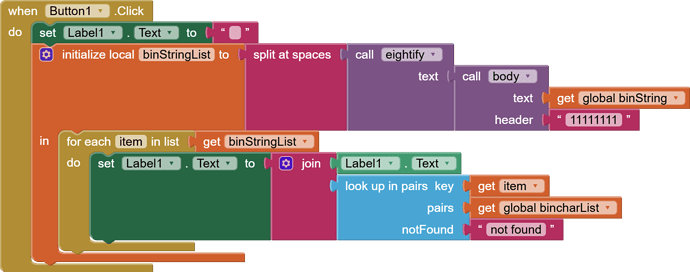

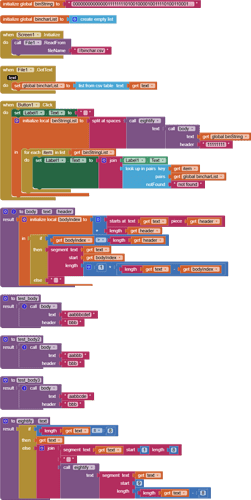

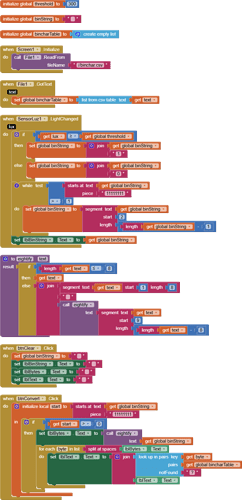

I'm working on a communication system using MIT App Inventor that includes a modulator and a demodulator. The modulator side is already completed: it takes a text message, converts each character to an 8-bit binary string using an ASCII dictionary, and adds a header 11111111 at the beginning. The entire binary string is then transmitted using the phone's Flash (1 = on, 0 = off).
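As a rough Python equivalent of the modulator described above (a sketch only; the real implementation is in App Inventor blocks, and the function name `modulate` is invented here), the framing amounts to:

```python
HEADER = "11111111"


def modulate(text):
    """Return the bit string to flash: the header, then 8 bits per character."""
    for ch in text:
        if ord(ch) > 127:
            raise ValueError(f"{ch!r} is not a 7-bit ASCII character")
    return HEADER + "".join(format(ord(ch), "08b") for ch in text)


print(modulate("Hi"))  # 111111110100100001101001
```

Because 7-bit ASCII characters always start with a 0 bit, a byte-aligned payload byte can never equal the all-ones header.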

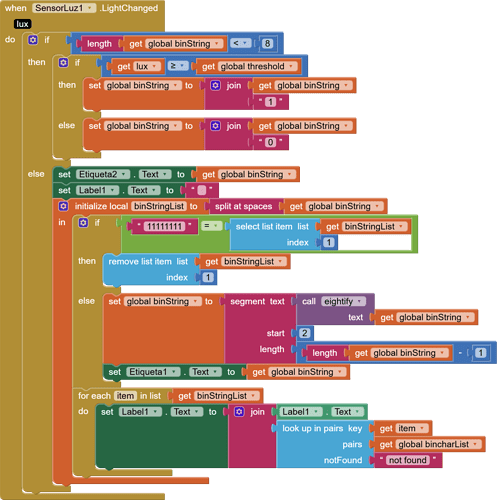

Now, I'm developing the demodulator, which uses the light sensor to detect flashes. My approach is:

- Constantly read values from the light sensor and convert them into binary (1s and 0s) depending on brightness.

- Use a sliding window of 8 bits to search for the header 11111111.

- Once the header is found, I read the next 8-bit chunks and decode them using the same ASCII dictionary to reconstruct the original message.

Here's the issue: the sliding window mechanism I've implemented seems to be collecting only 7 bits instead of 8, which means the header is never properly detected and decoding never starts.
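For comparison, here is a minimal Python sketch of an 8-bit sliding window. It is not a translation of the attached blocks. One plausible cause of a 7-bit window (an assumption, since the blocks are not reproduced here) is trimming or comparing before the new bit is appended; the sketch appends first and compares afterwards.

```python
from collections import deque

HEADER = "11111111"


def find_header(bits):
    """Return how many bits were consumed when the header completes, else None."""
    window = deque(maxlen=8)      # the oldest bit is dropped on the 9th append
    for count, bit in enumerate(bits, start=1):
        window.append(bit)                    # append the new bit first...
        if "".join(window) == HEADER:         # ...then compare all 8 bits
            return count
    return None


print(find_header("0010" + HEADER + "0101"))  # 12
```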

I’ve attached my .aia file in case someone is willing to look at the blocks directly.

Has anyone dealt with a similar issue or knows how to make sure my sliding window correctly maintains 8 bits every time?

Thanks a lot in advance!

binaryToText4 (3).aia (9.8 KB)

You are trying to do serial communication without setting a common baud rate.

See Basics of UART Communication for an example of a serial protocol.

Since you lack a clock, you can't set a baud rate, so you can't tell whether you have one really slow, long bit or a series of bits with the same value.
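To illustrate the ambiguity, here is a small Python sketch. The function `pulses_to_bits` and the pulse timings are invented for the example; it turns measured on/off durations into bits for an assumed bit period, and the same pulses decode differently when the assumed period changes.

```python
def pulses_to_bits(pulses, bit_duration):
    """pulses: list of (state, seconds) runs, where state is 1 (on) or 0 (off)."""
    out = []
    for state, seconds in pulses:
        # Number of bit periods this run spans; at least one bit per run.
        count = max(1, round(seconds / bit_duration))
        out.append(str(state) * count)
    return "".join(out)


pulses = [(1, 0.8), (0, 0.1), (1, 0.1), (0, 0.2)]
print(pulses_to_bits(pulses, 0.1))  # 111111110100
print(pulses_to_bits(pulses, 0.2))  # 1111010
```

Only the first reading contains the 8-bit header, which is why both sides have to agree on the bit period in advance.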

In the very slight chance that your transmitter and receiver work at the same rate, here's a decoder:

binaryToText43_1.aia (9.3 KB)

That's exactly what I was trying to do, yes. Thank you so much. Now I only need a way to determine when I should stop decoding, maybe by sending the size of the message with the data or something like that. Anyway, again, thanks so much, it was really helpful.

Stop at a common end character like 00000000 or line feed
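A minimal Python sketch of that idea, assuming the payload is already aligned to byte boundaries and that 00000000 is the agreed end character (the function name is made up for the example):

```python
TERMINATOR = "00000000"


def decode_until_terminator(payload):
    """Decode 8-bit chunks until the terminator byte or the end of the data."""
    chars = []
    for i in range(0, len(payload) - 7, 8):   # whole bytes only
        byte = payload[i:i + 8]
        if byte == TERMINATOR:
            break                              # stop; the terminator is not output
        chars.append(chr(int(byte, 2)))
    return "".join(chars)


print(decode_until_terminator("0100100001101001" + TERMINATOR + "01111000"))  # Hi
```

The comparison is made per byte rather than on any run of eight zeros, because two adjacent characters such as 01010000 and 00001111 contain eight consecutive zeros across their boundary.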

OK, I'll do that then, thanks.


Hello again,

Following up on a previous discussion in another forum, I'm working on a demodulator for a communication protocol. The data stream I receive has a specific structure:

- An 8-bit header consisting of all ones (11111111).

- The actual message, which can be of variable length and contains a sequence of ones and zeros.

- A trailing sequence of zeros to indicate the end of the transmission.

Currently, my demodulator logic is capturing the entire data stream, including the header of ones and the trailing zeros. I need help modifying the logic so that

- After detecting the 8-bit header of ones, it starts decoding and storing the subsequent bits as the message in text format.

- It stops storing bits and ideally displays only the extracted message when it encounters the trailing sequence of 8 zeros. The header of ones and the trailing zeros should not be part of the final displayed message.
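The two requirements above can be pictured as a small two-state machine: hunt for the header, then collect bytes until the trailer. The Python sketch below is only an illustration of that logic, not App Inventor blocks; the name `extract_messages` is hypothetical, and it assumes the trailer is exactly one byte-aligned 00000000.

```python
HEADER = "11111111"
TRAILER = "00000000"


def extract_messages(bits):
    """Yield each decoded message found in an iterable of '0'/'1' characters."""
    window = ""          # last 8 bits seen while hunting for the header
    byte = ""            # bits of the byte currently being assembled
    chars = []           # characters of the message in progress
    receiving = False
    for bit in bits:
        if not receiving:
            window = (window + bit)[-8:]
            if window == HEADER:               # header found: start a message
                receiving, byte, chars = True, "", []
        else:
            byte += bit
            if len(byte) == 8:
                if byte == TRAILER:            # trailer found: emit and reset
                    yield "".join(chars)
                    receiving, window = False, ""
                else:
                    chars.append(chr(int(byte, 2)))
                byte = ""


stream = ("000" + HEADER + "01001111" + "01001011" + TRAILER
          + "0000" + HEADER + "01001000" + "01101001" + TRAILER)
print(list(extract_messages(stream)))  # ['OK', 'Hi']
```

Neither the header nor the trailer reaches the output, because both are consumed by the state transitions rather than stored.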

Could anyone provide guidance or example blocks on how to implement this kind of filtering and extraction logic in MIT App Inventor 2? I've tried reusing the logic I use for the header, but all my attempts failed, and I'm not sure what the most efficient or correct way is to detect the end of the message based on the trailing zeros.

Any suggestions or examples would be greatly appreciated!

Thank you in advance for your assistance.

Mobile.aia (73.5 KB)

Hello everyone,

I'm working on an application where I need to map binary codes to characters, including numbers, letters (uppercase and lowercase), and various special symbols. For this, I'm using the TableText component and loading a CSV file with the format binary_code,character.

I've noticed that some special symbols seem to be causing issues or are not being interpreted correctly when the CSV file is read into the application. The binary codes I'm using for these symbols are (examples based on our conversation):

- Space: 00100000

- Double quote: 00100010

- Percent sign: 10010100

- Apostrophe: 00100111

- Comma: 00101100

- Semicolon: 00111011

And so on...

My CSV file has a structure like this (partial example):

Has anyone else experienced similar problems when working with special symbols in CSV files for the TableText component? Are there any special considerations regarding how these symbols should be encoded or escaped in the CSV file so that MIT App Inventor 2 interprets them correctly?

Any guidance or suggestions on how to resolve this issue and ensure that all characters, including the special symbols, are mapped correctly from the binary code would be greatly appreciated.
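For reference, this is how Python's standard `csv` module writes such a table. Under the usual CSV convention, a field containing a comma or a double quote is wrapped in double quotes, and a literal double quote is doubled. Whether the App Inventor CSV blocks accept exactly this quoting is an assumption worth testing. The sketch also derives each code from the character with `format(ord(ch), "08b")`, which gives 00100101 for the percent sign in standard ASCII.

```python
import csv
import io

symbols = [" ", '"', "%", "'", ",", ";"]
rows = [[format(ord(ch), "08b"), ch] for ch in symbols]

buffer = io.StringIO()
csv.writer(buffer, lineterminator="\n").writerows(rows)
print(buffer.getvalue())   # the comma row is written as 00101100,","

# Reading the text back yields the same two-item rows.
parsed = list(csv.reader(io.StringIO(buffer.getvalue())))
print(parsed == rows)
```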

binchar.csv (1.1 KB)

This is the error I'm receiving: Lookup in pairs: the list [["00110001","1"]...] is not a well-formed list of pairs
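That message suggests that at least one row is not a two-item list. A quick way to locate such rows, sketched in Python with invented sample data (an unquoted comma character is one plausible cause, since it splits the row into three fields):

```python
def malformed_rows(rows):
    """Return (row_number, row) for every row that is not exactly [key, value]."""
    return [(n, row) for n, row in enumerate(rows, start=1) if len(row) != 2]


# Hypothetical parse result: row 2 came from the unquoted line "00101100,,".
rows = [["00110001", "1"], ["00101100", "", ""], ["00100000"]]
print(malformed_rows(rows))  # [(2, ['00101100', '', '']), (3, ['00100000'])]
```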

Thank you very much for your help!