Did you specify what the data are? For example, you have to specify "Weight: 50kg" instead of directly "50".

Hello everyone,

I have updated this extension to version 5, including some new blocks and functions.

New blocks

I have added the ability to stream chat responses. It works like the Chat block, but the response arrives in chunks, like the typing view you see in ChatGPT, so you don't have to wait until OpenAI finishes generating everything.

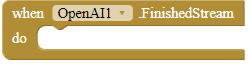

FinishedStream

This event is fired when all chunks of the stream are returned via the GotStream event.

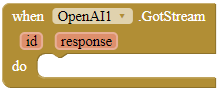

GotStream

This event is fired each time OpenAI returns a chunk for your stream request. The response parameter contains the returned chunk.

Parameters: id = number (int), response = text

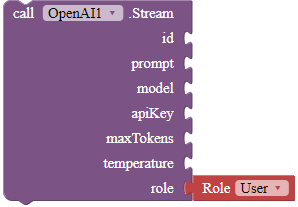

Stream

Functions the same as the Chat block, but gets the response as chunks so that you do not have to wait until OpenAI finishes generating your response. This is like the "typing" view you see in ChatGPT. The id parameter is just for you to keep track of the response; you can set it to any integer. The response won't be affected by the ID parameter. For the other parameters please check the documentation for the Chat block.

Credit to @David_Ningthoujam for reference and his help.

Parameters: id = number (int), prompt = text, apiKey = text, maxTokens = text, temperature = number (double)
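For readers who prefer text code to blocks, the event flow described above can be sketched roughly like this. It is only a Python illustration, not the extension's implementation: `StreamCollector`, `got_stream`, and `finished_stream` are hypothetical names that mirror the GotStream and FinishedStream events, and it assumes that the chunks for one id arrive in order and that the finish notification can be matched to an id.

```python
from collections import defaultdict


class StreamCollector:
    """Collects streamed chunks per request id (hypothetical helper)."""

    def __init__(self):
        self._parts = defaultdict(list)  # id -> chunks received so far
        self.completed = {}              # id -> full response text

    def got_stream(self, stream_id, response):
        # Mirrors GotStream: one chunk arrives for one request id.
        self._parts[stream_id].append(response)
        # Return the text received so far, e.g. to refresh a label.
        return "".join(self._parts[stream_id])

    def finished_stream(self, stream_id):
        # Mirrors FinishedStream; matching it to an id is an assumption.
        text = "".join(self._parts.pop(stream_id, []))
        self.completed[stream_id] = text
        return text
```

Keeping one list per id means two streams started with different ids do not mix their chunks.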

New functions

Added the role parameter to the Chat and Stream blocks. Use this parameter to specify the role you are speaking as, such as user or system. If you are unsure, set it to User. Thank you @Darko!

I must also thank @Darko for providing me with their API key so that I can test this update. Thank you Darko!

Note: I have exams in early June. I am planning to stop updating this extension for a while so that I can focus on my exams. Starting from tomorrow, I will stop updating this extension until my exams end. You can still reply here, and I will still see your messages, but until June 16, 2023, I will not update this or any of my extensions.

Hi @gordonlu310,

thanks for the update!

I am trying to make this work as a "conversation", meaning that new questions are additions to previous ones and that GPT is aware of the previous questions and answers.

Is this somehow possible?

It's not possible directly via the OpenAI API. Maintaining the context of the conversation is possible, but the earlier messages would have to be sent to the bot again with every request, which spends a lot of extra tokens. I will look for another solution later.
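To make the token trade-off concrete, here is a minimal sketch of the "resend the earlier messages" approach. It is not something the extension does today: `build_messages`, `record_turn`, and `max_entries` are hypothetical names, and the only thing taken from the OpenAI chat format is that each message is a role plus its content. Capping the history is one simple way to limit how many tokens each request spends.

```python
def record_turn(history, prompt, answer):
    """Store one question and its answer in the running history."""
    history.append({"role": "user", "content": prompt})
    history.append({"role": "assistant", "content": answer})


def build_messages(history, new_prompt, max_entries=6, system_prompt=None):
    """Return the messages to send, keeping only the latest entries."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    # Older entries are dropped so the request does not grow forever.
    recent = history[-max_entries:] if max_entries > 0 else []
    messages.extend(recent)
    messages.append({"role": "user", "content": new_prompt})
    return messages
```

A smaller `max_entries` costs fewer tokens but makes the model forget earlier parts of the conversation sooner.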

Well done. Was waiting for this update

Hi all, a question for the new stream block: the block returns the text in parts, chunks I think. How can I join the parts in order to make sense of the text by eliminating the spaces? I hope I was able to explain myself. Thank you

Hi Dario.

Yes.

I'm not sure what you mean by "eliminating the spaces". Could you please elaborate? Thanks.

Interesting. Did you add spaces to join the texts together? The extension does not add spaces between chunks artificially. I didn't experience the same problem while testing with English, but I believe it might be a problem with the API itself when it tries to chunk the text.

I haven't added spaces. I've used "trim" to try to remove any spaces generated in the chunks, but it doesn't seem to work. Do you think it is due to the language used (Italian)? I will try with a prompt in English.

I don't know; this is only my guess. The model is probably better trained with English.

Try adding a condition to ignore the response if it is empty.
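As a rough illustration of that suggestion, the sketch below joins chunks while skipping empty ones. The chunk values are made up for the example, and it is only a guess that they resemble what the stream returns for Italian text. The second function is included for contrast: if chunks carry their own leading spaces, trimming each chunk before joining removes the spaces between words.

```python
def join_chunks(chunks):
    """Concatenate streamed chunks, ignoring empty or missing ones."""
    return "".join(chunk for chunk in chunks if chunk)


def join_trimmed(chunks):
    """For contrast only: trims every chunk before joining."""
    return "".join(chunk.strip() for chunk in chunks if chunk)


# Made-up chunks; an empty chunk is included on purpose.
chunks = ["Buon", "", "giorno", " a", " tutti"]

plain = join_chunks(chunks)     # keeps the spaces the chunks carry
trimmed = join_trimmed(chunks)  # loses the spaces between words
```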

Hello, my copy of the extension doesn't have the model block. Can you send the link for the extension?

Check the newest version (the documentation). The model block has been removed.

Please post the code. I don't know why I'm getting "[ """]"" in every answer. Please help me solve this problem and kindly post the blocks so that I can follow them. It's for a competition, so please help me out.

Which code?

You can find the test AIA in the topic. Did you specify a correct API key?

That's usually an indication that you are treating a list as a piece of text, instead of selecting items from the list.
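The same effect can be shown with a Python analogy. App Inventor renders lists a little differently, so the exact brackets and quotes will not match, but the idea is the same: converting the whole list to text keeps the list punctuation, while selecting an item gives only the answer.

```python
answers = ["Hello there"]  # a list holding one response

as_text = str(answers)     # the whole list rendered as text
first_item = answers[0]    # the item selected from the list
```

Here `as_text` still contains the brackets and quotes, while `first_item` is just the response text.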

Hi Gordon, with some requests I get the following error: expected literal value at character 0. Could it depend on the length of the prompt or on the presence of particular characters in the prompt? (for example : )

Thank you

Hi Dario,

Do you have any double-quotes (") in your prompt? If so, the extension will fail to process the prompt; you should replace double-quotes with single-quotes (').
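Why double quotes can break a request is easier to see with a small sketch. It assumes the request body is JSON built by plain string concatenation, which is a guess about the general mechanism and not a description of the extension's code; `naive_body` and `safe_body` are hypothetical names. A JSON encoder escapes the quotes instead, so the prompt survives unchanged.

```python
import json


def naive_body(prompt):
    # Assumed approach: paste the prompt straight into a JSON string.
    return '{"prompt": "' + prompt + '"}'


def safe_body(prompt):
    # A JSON encoder escapes quotes and line breaks for us.
    return json.dumps({"prompt": prompt})


def is_valid_json(text):
    """Report whether the text parses as JSON."""
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError:
        return False
```

For example, `is_valid_json(naive_body('Say "hi"'))` is false, while `is_valid_json(safe_body('Say "hi"'))` is true. Raw line breaks inside a prompt fail in the naive version for the same reason.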

Hi Gordon, I think I fixed it. I wasn't using ". I've noticed that with multi-sentence prompts, I often get the error.

For example, the prompt 'write an article by [ ] with introduction, context, table of contents and summary' does not return the error, but the prompt 'write an article by [ ]. Write an introduction, the context, a table of context and a summary' does.

In part, I think, it also depends on the length of the text of the variable [ ]. However, I solved it by creating a simpler and more linear request. Of course these are just my guesses.