You're welcome!

Continuing the discussion from [F/OS]  Artificial Intelligence and OpenAI!:

I can give you an API key. Contact me.

Thanks, but I've already gotten 2 keys. Sorry for the inconvenience.

Hello

Does the component for generating an image work?

Hello everyone,

Just an update on what I'm doing.

Updated the Chat block to GPT 3.5 and GPT 4. You can choose from different 3.5 and 4 models.

Fixed the GenerateImage error!

Checked the EditText block, still working.

All that's left is to add a moderation block that lets you check a piece of text for issues such as offensive language.

Hello everyone,

Sorry for the late reply. I have finally updated this extension to version 4, with bug fixes, new features and new blocks!

New features

- Fixed the image generation error! It should now work as usual.

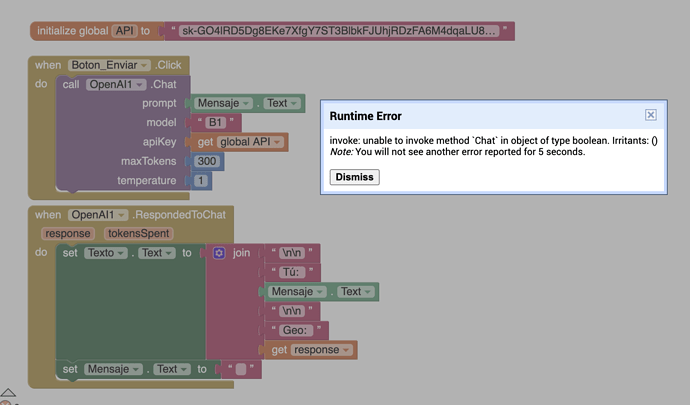

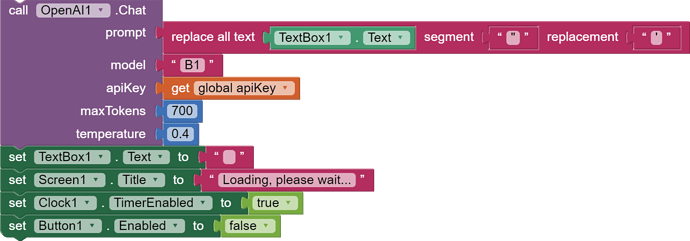

- Updated the Chat block to the latest GPT 3.5 and GPT 4. You will no longer be able to use the GPT 3 models if you update this extension. Unfortunately, because of naming restrictions (no dots or hyphens allowed), I'm unable to provide a helper block for this. The `Model` helper block should disappear; please now use this list for the `model` parameter. For example, if I want to use the model `gpt-4`, I would enter `B1` as the `model` parameter.

A1: gpt-3.5-turbo

A2: gpt-3.5-turbo-0301

B1: gpt-4

B2: gpt-4-0314

B3: gpt-4-32k

B4: gpt-4-32k-0314
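For anyone curious what the code-to-model lookup amounts to, here is a minimal Python sketch. It only mirrors the list above; the names `MODEL_CODES` and `resolve_model` are made up for illustration, and how the extension actually does the lookup internally is an assumption on my part.

```python
# Short codes from the list above, mapped to the OpenAI model names.
MODEL_CODES = {
    "A1": "gpt-3.5-turbo",
    "A2": "gpt-3.5-turbo-0301",
    "B1": "gpt-4",
    "B2": "gpt-4-0314",
    "B3": "gpt-4-32k",
    "B4": "gpt-4-32k-0314",
}


def resolve_model(code: str) -> str:
    """Hypothetical helper: turn a short code such as 'B1' into a model name."""
    key = code.strip().upper()
    if key not in MODEL_CODES:
        raise ValueError(f"Unknown model code: {code!r}")
    return MODEL_CODES[key]


print(resolve_model("B1"))  # gpt-4
```

Note that a valid code does not guarantee access: the API key you supply also has to be allowed to use the chosen model.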

New blocks

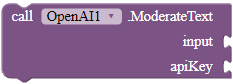

ModerateText

The moderation API is a service provided by OpenAI that allows you to check whether a text contains inappropriate or offensive information. Put the text that you want to analyze in the input parameter, and supply the block with an API key.

Parameters: input = text, apiKey = text
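If you want to see roughly what a moderation call looks like outside the blocks, here is a small Python sketch that builds the HTTP request for OpenAI's moderation endpoint. The function name `build_moderation_request` and the placeholder key are made up for illustration, and I'm assuming the block sends something equivalent; it is not the extension's actual code.

```python
import json
import urllib.request

# OpenAI's moderation endpoint.
MODERATION_URL = "https://api.openai.com/v1/moderations"


def build_moderation_request(text: str, api_key: str) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) a moderation request."""
    body = json.dumps({"input": text}).encode("utf-8")
    return urllib.request.Request(
        MODERATION_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Placeholder key; replace it with your own before sending anything.
req = build_moderation_request("some text to check", "sk-your-key-here")

# Sending the request needs a real key and network access:
# with urllib.request.urlopen(req) as resp:
#     result = json.load(resp)["results"][0]
```

The request is only built here, not sent, so you can inspect it without spending anything on your account.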

ModeratedText

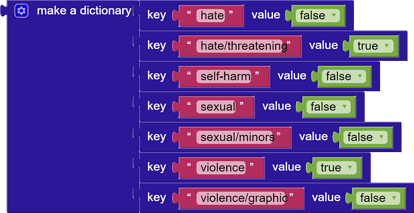

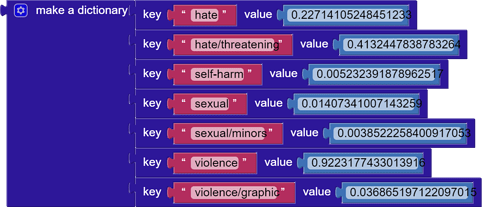

This event is fired when OpenAI has moderated the text you inputted in the ModerateText block! `flagged` refers to whether the text contains indecent information in general.

`categoryFlags` includes a dictionary of 7 types of inappropriate information (hate, hate/threatening, self-harm, sexual, sexual/minors, violence, violence/graphic). These 7 are the keys of the dictionary. Corresponding to them are boolean values for whether the text has this type of inappropriate information. Sample response:

`categoryScores` includes the same 7 types as the keys, but corresponding to them are decimals from 0 to 1. Values closer to 0 mean that the text violates less in this category, and values closer to 1 mean it violates more.

Parameters: flagged = boolean, categoryFlags = dictionary, categoryScores = dictionary
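To make the two dictionaries a bit more concrete, here is a short Python sketch of how you might read them. The values are made up for illustration (they are not a real API response), and `summarize_moderation` is a hypothetical helper, not something the extension provides.

```python
def summarize_moderation(flagged, category_flags, category_scores):
    """Hypothetical helper: list flagged categories and the highest score."""
    hits = sorted(name for name, hit in category_flags.items() if hit)
    top = max(category_scores, key=category_scores.get)
    return {
        "flagged": flagged,
        "hits": hits,
        "top_category": top,
        "top_score": category_scores[top],
    }


# Made-up values using the 7 category names from the post above.
category_flags = {
    "hate": False,
    "hate/threatening": False,
    "self-harm": False,
    "sexual": False,
    "sexual/minors": False,
    "violence": True,
    "violence/graphic": False,
}
category_scores = {
    "hate": 0.02,
    "hate/threatening": 0.01,
    "self-harm": 0.0,
    "sexual": 0.0,
    "sexual/minors": 0.0,
    "violence": 0.91,
    "violence/graphic": 0.12,
}

summary = summarize_moderation(True, category_flags, category_scores)
print(summary["hits"])          # ['violence']
print(summary["top_category"])  # violence
```

In an app you would typically branch on `flagged` first and only look at the per-category values when you need to tell the user why the text was rejected.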

I will update the entire documentation tomorrow morning.

Hello! Is there a way to automatically check how many tokens I have spent? My friends could reach my account's limit, and then I would have to pay for it.

I see that a Python library does this but I can't find anything else about the topic. I will find out. Thanks!
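One possible approach, sketched in Python: OpenAI's chat responses include a `usage` object with `prompt_tokens`, `completion_tokens` and `total_tokens`, so you can add up `total_tokens` yourself and stop sending requests once a self-imposed budget is reached. The `TokenBudget` class and the sample responses below are made up for illustration, and whether the extension exposes the `usage` field in its blocks is an assumption.

```python
class TokenBudget:
    """Hypothetical helper: track tokens spent against a self-imposed limit."""

    def __init__(self, limit: int):
        self.limit = limit
        self.used = 0

    def record(self, response: dict) -> int:
        # Add the tokens reported by one API response; missing usage counts as 0.
        self.used += response.get("usage", {}).get("total_tokens", 0)
        return self.used

    def exhausted(self) -> bool:
        return self.used >= self.limit


budget = TokenBudget(limit=1000)

# Made-up responses, reduced to the part that matters here.
budget.record({"usage": {"prompt_tokens": 300, "completion_tokens": 120, "total_tokens": 420}})
budget.record({"usage": {"prompt_tokens": 500, "completion_tokens": 110, "total_tokens": 610}})

print(budget.used)         # 1030
print(budget.exhausted())  # True
```

This only counts requests that go through your own app, so it is a local safeguard rather than a replacement for the spending limits in your OpenAI account settings.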

Gordon, thank you very much for this. Great work!

Is there a way to use the system role with its own dedicated content that would be predefined (not something mobile app end users can change)? I am referring to the Chat option, and I see that currently only the user role is supported.

Thanks

Implementing the system role is possible with a modification to the block. Thanks!
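For reference, this is roughly what a chat request with a fixed system role looks like at the API level, sketched in Python. The `messages` format with `role` and `content` is OpenAI's chat format; the helper name `build_chat_payload` and the example prompt are made up, and this is not how the block is implemented today.

```python
# Predefined by the app author; end users never get to edit this.
SYSTEM_PROMPT = "You are a helpful assistant for a recipe app."


def build_chat_payload(user_text: str, model: str = "gpt-3.5-turbo") -> dict:
    """Hypothetical helper: put the fixed system message before the user's text."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": user_text},
        ],
    }


payload = build_chat_payload("What can I cook with eggs and rice?")
print(payload["messages"][0]["role"])  # system
```

Keeping the system prompt as a constant in the app, rather than in a text box, is what stops end users from changing it.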

Do you have plans to develop this, or is there a way I can help you develop it?

I can add this in a new release along with the stream feature.

Are you using the iOS companion? You cannot use extensions on iOS.

Yeah... So, just so we're clear, if I'm using an Android, is it supposed to work? Thanks btw :))

It should.

Cool, thanks :DD

B1 is not working.

Error: model not found

Please show your blocks.