Hi! I have this weird problem with the timer.

I'll describe the program.

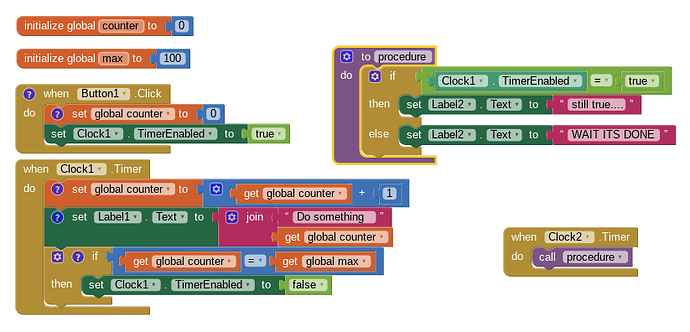

Here is a screenshot.

Both timers are set to always fire, and timer 2 is set to enabled.

I will be changing timer 1's time, while timer 2 is always set to 1 ms (I just wanted something that always runs, and that's what I came up with).

OK, onto the problem.

The problem is that whenever I set a shorter time for timer 1 (going from 1000 ms to 100 ms to 10 ms), it seems to take a bit of extra time to register... or something. Let me explain with an example.

When I set global max to 10 and timer 1 to 1000 ms, it takes 10 seconds for it to stop.

But when I set global max to 100 and timer 1 to 100 ms, it should take the same time, right?

Well, what actually happens is that it goes a little bit over: around 11 seconds.

And when I set global max to 1000 and timer 1 to 10 ms, it's even worse, taking around 20 seconds to complete instead of 10.

Maybe I'm doing my math wrong... but I'm pretty sure that it's not supposed to work like that.
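
To double-check my math, here is a quick Python sketch. It is not part of the actual program, and the function names are made up just for illustration. It assumes the total run time should simply be global max multiplied by timer 1's time, and it uses my rough timings from above, so the results are only approximate.

```python
# Each run: (global max, timer 1 time in ms, roughly observed seconds).
# The observed values are my approximate timings, not precise measurements.
runs = [
    (10, 1000, 10.0),
    (100, 100, 11.0),
    (1000, 10, 20.0),
]


def expected_seconds(global_max, interval_ms):
    """Total time if every tick took exactly interval_ms (my assumption)."""
    return global_max * interval_ms / 1000


def extra_ms_per_tick(global_max, interval_ms, observed_s):
    """Average extra delay per tick implied by the observed total time."""
    extra_s = observed_s - expected_seconds(global_max, interval_ms)
    return extra_s * 1000 / global_max


for global_max, interval_ms, observed_s in runs:
    print(
        f"max={global_max}, timer 1={interval_ms} ms: "
        f"expected {expected_seconds(global_max, interval_ms)} s, "
        f"observed about {observed_s} s, "
        f"extra per tick about {extra_ms_per_tick(global_max, interval_ms, observed_s)} ms"
    )
```

So all three settings should take 10 seconds by my math. If my rough timings are right, the 100 ms and 10 ms runs both work out to about 10 ms of extra delay per tick, which would only add up to about 0.1 seconds in the 1000 ms run.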

Any explanation or help is appreciated! Thank you!