Hello,

I am an undergraduate engineering student working on a research dissertation about detecting objects on the ground. I am using the “Look” extension in MIT App Inventor and have some questions:

Is there a way to access and train the database that the “Look” function relies on, so that I can train it to recognise specific things such as a brick, a wheel, or an animal or human walking? The aim is to categorise these objects.

If not, is there a way to add more objects to the system, or to link other databases to this function?

Is there a way to pass parameters to the database via the function?

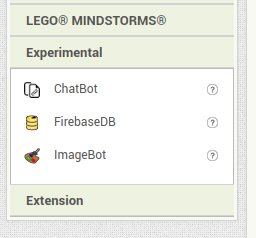

Are there similar or alternative functions I could use?

In addition, is there a way to link ChatGPT to MIT App Inventor?

Thanks in advance for any help you can provide.

Best wishes,

Victor