1. Overview

The AwsRekognition extension integrates Amazon Rekognition's face recognition capabilities into your application. It lets you create and manage collections, index faces, and search for matches within those collections. It also supports image comparison, returning detailed metadata and similarity scores for detected faces. You can associate faces with specific user IDs and manage those associations. Results are delivered through events, so your app can respond as each operation completes. It is intended for apps that need face recognition backed by AWS.

Latest Version: 1

Published: 2024-08-07T18:30:00Z

Last Updated: 2024-08-07T18:30:00Z

AIX size: 335 KB

Sponsored by @3dmixer

2. Blocks

3. Documentation

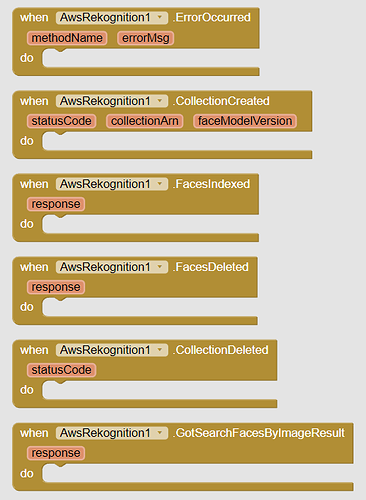

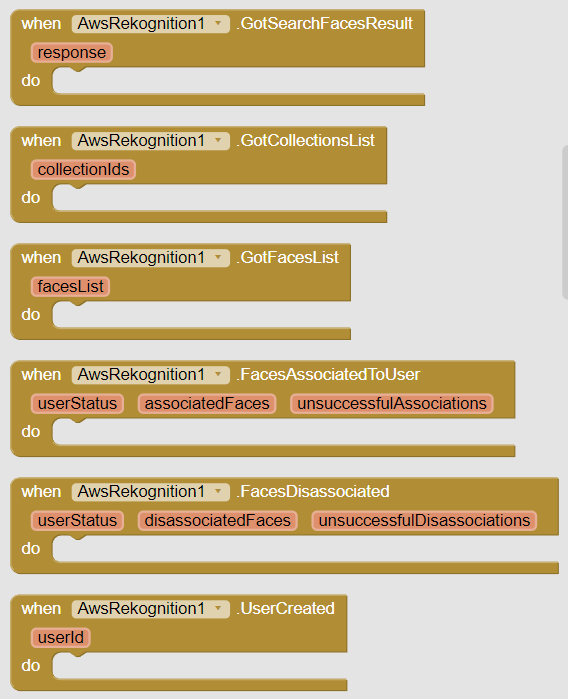

Events

| Event | Description | Parameters |
| --- | --- | --- |
| ErrorOccurred | Raised when an error occurs during a recognition operation or an internal operation. | methodName (text), errorMsg (text) |
| CollectionCreated | Raised after a collection is created. | statusCode (number), collectionArn (text), faceModelVersion (text) |
| FacesIndexed | Raised after the response to an index faces operation is received. | response (text) |
| FacesDeleted | Raised after the response to a face deletion operation is received. | response (text) |
| CollectionDeleted | Raised after a collection is deleted. | statusCode (number) |
| GotSearchFacesByImageResult | Raised after the SearchFacesByImage result is received. | response (text) |
| GotSearchFacesResult | Raised after the SearchFaces result is received. | response (text) |
| GotCollectionsList | Raised after the list of collections is received. | collectionIds (list) |
| GotFacesList | Raised after the faces list is received. Each list item is JSON text representing face details. | facesList (list) |
| FacesAssociatedToUser | Raised after face IDs are associated with a user. | userStatus (text), associatedFaces (list), unsuccessfulAssociations (list) |
| FacesDisassociated | Raised after faces are disassociated from a user. The disassociatedFaces list contains the face IDs that were successfully disassociated. The unsuccessfulDisassociations list contains the face IDs that could not be disassociated. | userStatus (text), disassociatedFaces (list), unsuccessfulDisassociations (list) |
| UserCreated | Raised after a user is created. Returns the user ID. | userId (text) |
| UserDeleted | Raised after a user is deleted. | userId (text) |
| GotUsersList | Raised after the users list is received. | usersList (list) |
| GotComparisonResult | Raised after the face comparison result is received. | response (text) |
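
Since GotFacesList delivers each face as JSON text, the items need to be parsed before they can be used. The sketch below shows one way to do that outside the app, in Python. It assumes each item carries the `FaceId` and optional `UserId` keys that appear in the `Face` object of the IndexFaces response; the helper name and the sample values are hypothetical.

```python
import json
from collections import defaultdict
from typing import Dict, List


def group_face_ids_by_user(faces_list: List[str]) -> Dict[str, List[str]]:
    """Map each UserId to its face IDs; faces with no user go under ''."""
    grouped: Dict[str, List[str]] = defaultdict(list)
    for item in faces_list:
        face = json.loads(item)  # each list item is assumed to be one JSON object
        grouped[face.get("UserId", "")].append(face["FaceId"])
    return dict(grouped)


# Made-up items standing in for the facesList parameter.
faces_list = [
    json.dumps({"FaceId": "face-1", "UserId": "alice"}),
    json.dumps({"FaceId": "face-2"}),
    json.dumps({"FaceId": "face-3", "UserId": "alice"}),
]
print(group_face_ids_by_user(faces_list))
```

Faces grouped under the empty key are the ones that have not been associated with any user yet.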

Methods

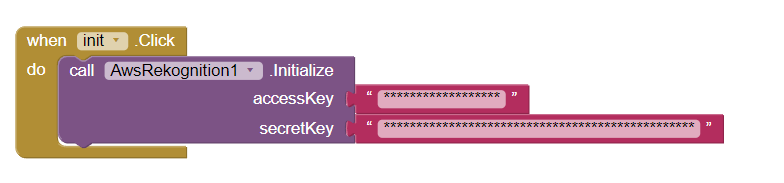

| Method | Description | Parameters |
| --- | --- | --- |
| Initialize | Initializes the AWS Rekognition SDK. | accessKey (text), secretKey (text) |

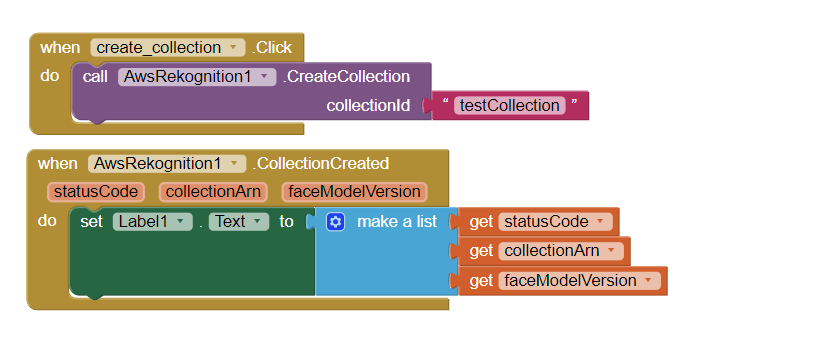

| Method | Description | Parameters |
| --- | --- | --- |
| CreateCollection | Creates a collection in an AWS Region. You can add faces to the collection using the IndexFaces operation. For example, you might create one collection for each of your application's users. A user can then index faces using the IndexFaces operation and persist the results in a specific collection, and later search for faces in that user-specific collection. When you create a collection, it is associated with the latest version of the face model. | |
| IndexFaces | Detects faces in the input image and adds them to the specified collection. Amazon Rekognition doesn't save the actual faces that are detected. Instead, the underlying detection algorithm first detects the faces in the input image. For each face, the algorithm extracts facial features into a feature vector and stores it in the backend database. Amazon Rekognition uses feature vectors when it performs face match and search operations using the SearchFaces and SearchFacesByImage operations. If you provide the optional ExternalImageId for the input image, Amazon Rekognition associates this ID with all faces that it detects. When you call the ListFaces operation, the response returns the external ID. You can use this external image ID to create a client-side index that associates the faces with each image, and then use the index to find all faces in an image. You can specify the maximum number of faces to index with the MaxFaces input parameter. This is useful when you want to index the largest faces in an image and don't want to index smaller faces, such as those belonging to people standing in the background. The QualityFilter input parameter allows you to filter out detected faces that don't meet a required quality bar. The quality bar is based on a variety of common use cases. By default, IndexFaces chooses the quality bar that's used to filter faces. You can also choose the quality bar explicitly by setting QualityFilter to LOW, MEDIUM, or HIGH. If you do not want to filter detected faces, specify NONE. | |
| DeleteFaces | Deletes faces from a collection. You specify a collection ID and a list (max 4096 items) of face IDs to remove from the collection. | collectionId (text), faceIds (list) |
| DeleteCollection | Deletes the specified collection. Note that this operation removes all faces in the collection. | collectionId (text) |
| SearchFacesByImage | For a given input image, first detects the largest face in the image, and then searches the specified collection for matching faces. The operation compares the features of the input face with faces in the specified collection. Note: To search for all faces in an input image, you might first call the IndexFaces operation, and then use the face IDs returned in subsequent calls to the SearchFaces operation. You pass the input image either as a base64-encoded string or as a file path. The image must be a PNG or JPEG file. The response returns a list of faces that match, ordered by similarity score with the highest similarity first. More specifically, it is a list of metadata for each face match found. Along with the metadata, the response includes a similarity value indicating how similar the face is to the input face. The response also returns the bounding box of the face that Amazon Rekognition used for the input image, with a confidence level that the bounding box contains a face. | |
| SearchFaces | For a given input face ID, searches for matching faces in the collection the face belongs to. You get a face ID when you add a face to the collection using the IndexFaces operation. The operation compares the features of the input face with faces in the specified collection. The response returns a list of faces that match, ordered by similarity score with the highest similarity first. More specifically, it is a list of metadata for each face match that is found. Along with the metadata, the response includes a confidence value for each face match, indicating the confidence that the specific face matches the input face. | collectionId (text), faceId (text), maxFaces (number), confidenceThreshold (number) |
| ListCollections | Fetches the list of collection IDs in your account. | |
| ListFaces | Fetches the list of faces, with metadata, in the specified collection. This metadata includes information such as the bounding box coordinates, the confidence (that the bounding box contains a face), and the face ID. If UserId is empty, all faces are returned; otherwise only faces associated with UserId are returned. | collectionId (text), userId (text), maxResults (number) |
| AssociateFaces | Associates one or more faces with an existing UserID. Takes a list of FaceIds. Each FaceId present in the FaceIds list is associated with the provided UserID. The number of FaceIds that can be used as input in a single request is limited to 100. Note that the total number of faces that can be associated with a single UserID is also limited to 100. Once a UserID has 100 faces associated with it, no additional faces can be added. If successful, an array of AssociatedFace objects containing the associated FaceIds is returned. If a given face is already associated with the given UserID, it is ignored and is not returned in the response. If a given face is already associated with a different UserID, isn't found in the collection, doesn't meet the UserMatchThreshold, or there are already 100 faces associated with the UserID, it is returned as part of the UnsuccessfulAssociations list. The UserStatus reflects the status of an operation that updates a UserID representation with a list of given faces. The UserStatus can be: ACTIVE (all associations or disassociations of FaceIDs for a UserID are complete), CREATED (a UserID has been created but has no FaceIDs associated with it), or UPDATING (a UserID is being updated and associations or disassociations of FaceIDs are currently taking place). | |
| DisassociateFaces | Removes the association between the faces supplied in the list of FaceIds and the User. If the User does not exist, an error is raised. If successful, the list of faces that were disassociated from the User is returned. If a given face is already disassociated from the given UserID, it is ignored and not returned in the response. If a given face is associated with a different User or is not found in the collection, it is returned as part of UnsuccessfulDisassociations. You can remove 1 to 100 face IDs from a user at one time. | collectionId (text), faceIds (list), userId (text) |
| CreateUser | Creates a new User within a collection specified by CollectionId. Takes UserId as a parameter, which is a user-provided ID that should be unique within the collection. The provided UserId aliases the system-generated UUID to make the UserId more user friendly. | userId (text) |

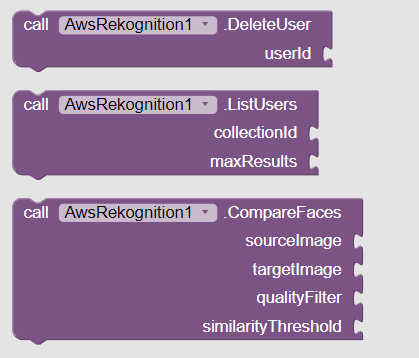

| Method | Description | Parameters |
| --- | --- | --- |
| DeleteUser | Deletes the specified UserID within the collection. Faces that are associated with the UserID are disassociated from it before the UserID is deleted. | userId (text) |
| ListUsers | Fetches the list of users in the specified collection, with metadata such as the UserID. The anonymous User (used to reserve faces without any identity) is not returned as part of this request. The results are sorted by the system-generated primary key ID. | collectionId (text), maxResults (number) |
| CompareFaces | Compares a face in the source input image with each of the 100 largest faces detected in the target input image. If the source image contains multiple faces, the service detects the largest face and compares it with each face detected in the target image. In response, the operation returns an array of face matches ordered by similarity score in descending order. For each face match, the response provides a bounding box of the face, facial landmarks, pose details (pitch, roll, and yaw), quality (brightness and sharpness), and a confidence value (indicating the level of confidence that the bounding box contains a face). The response also provides a similarity score, which indicates how closely the faces match. | sourceImage (text), targetImage (text), qualityFilter (text), similarityThreshold (number) |
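
Several methods cap the number of face IDs per request: 4096 for DeleteFaces and 100 for AssociateFaces and DisassociateFaces. A longer list therefore has to be split into batches before it is passed to the method. The Python sketch below illustrates the batching logic only; the helper name and the sample IDs are hypothetical, and in an app the same logic would be built with list blocks.

```python
from typing import Iterator, List, Sequence

# Per-request limits quoted in the method descriptions above.
MAX_ASSOCIATE_FACE_IDS = 100
MAX_DELETE_FACE_IDS = 4096


def chunk_face_ids(face_ids: Sequence[str], limit: int) -> Iterator[List[str]]:
    """Yield consecutive batches of at most `limit` face IDs."""
    if limit < 1:
        raise ValueError("limit must be at least 1")
    for start in range(0, len(face_ids), limit):
        yield list(face_ids[start:start + limit])


# Made-up face IDs, for illustration only.
face_ids = [f"face-{n}" for n in range(5000)]
batches = list(chunk_face_ids(face_ids, MAX_DELETE_FACE_IDS))
print([len(batch) for batch in batches])  # prints [4096, 904]
```

Batching does not lift the separate cap of 100 faces per UserID, so for AssociateFaces it only helps when the total for that user stays within the cap.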

4. Usages

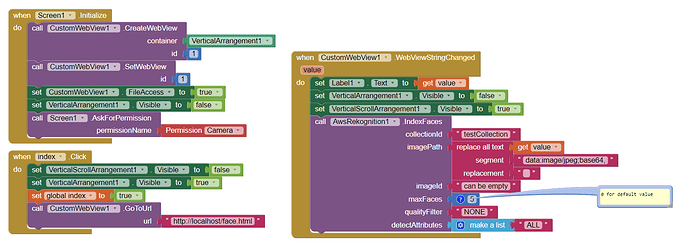

First, initialize the AWS SDK.

Next, create a collection.

Then index the faces in an image and add them to the collection.

You can provide the image as a file path, a content URI, or a base64 string.

In this example, I use a WebView to get the base64 data. You can also use the Camera or pick a file using SAF / File Picker.
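
If the image bytes come from somewhere other than a WebView, the base64 string can be produced with any standard encoder. Here is a minimal Python sketch of that step. Whether the extension expects bare base64 or a data URI prefix is not stated here, so this produces bare base64 as an assumption; the function name is hypothetical.

```python
import base64


def image_bytes_to_base64(image_bytes: bytes) -> str:
    """Encode raw image bytes as a bare base64 string (no data URI prefix)."""
    return base64.b64encode(image_bytes).decode("ascii")


# The 8-byte PNG signature stands in for a real image file here.
png_signature = b"\x89PNG\r\n\x1a\n"
encoded = image_bytes_to_base64(png_signature)
print(encoded)
```

For a real file, read it in binary mode and pass the bytes to the same function.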

detectAttributes:

DEFAULT, ALL, AGE_RANGE, BEARD, EMOTIONS, EYE_DIRECTION, EYEGLASSES, EYES_OPEN, GENDER, MOUTH_OPEN, MUSTACHE, FACE_OCCLUDED, SMILE, SUNGLASSES
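
A misspelled attribute name is easy to miss, so it can help to check the names against the list above before calling IndexFaces. The following Python sketch shows such a check. How the extension accepts detectAttributes (a list or a single text value) is not specified here, so the sketch works on a plain list of names, and the function name is hypothetical.

```python
from typing import Iterable, List

# Attribute names exactly as listed above.
SUPPORTED_ATTRIBUTES = frozenset({
    "DEFAULT", "ALL", "AGE_RANGE", "BEARD", "EMOTIONS", "EYE_DIRECTION",
    "EYEGLASSES", "EYES_OPEN", "GENDER", "MOUTH_OPEN", "MUSTACHE",
    "FACE_OCCLUDED", "SMILE", "SUNGLASSES",
})


def validate_detect_attributes(requested: Iterable[str]) -> List[str]:
    """Return the names upper-cased and trimmed, or raise on an unknown name."""
    normalized = [name.strip().upper() for name in requested]
    unknown = [name for name in normalized if name not in SUPPORTED_ATTRIBUTES]
    if unknown:
        raise ValueError(f"Unsupported detectAttributes: {unknown}")
    return normalized


print(validate_detect_attributes(["smile", " EMOTIONS "]))
```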

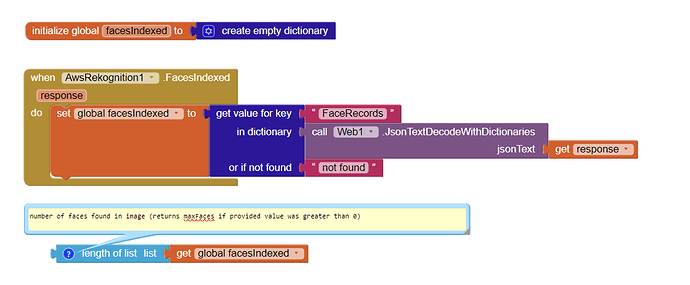

Handle the IndexFaces operation response

Response Elements

    {
      "FaceModelVersion": "string",
      "FaceRecords": [
        {
          "Face": {
            "BoundingBox": {
              "Height": number,
              "Left": number,
              "Top": number,
              "Width": number
            },
            "Confidence": number,
            "ExternalImageId": "string",
            "FaceId": "string",
            "ImageId": "string",
            "IndexFacesModelVersion": "string",
            "UserId": "string"
          },
          "FaceDetail": {
            "AgeRange": {
              "High": number,
              "Low": number
            },
            "Beard": {
              "Confidence": number,
              "Value": boolean
            },
            "BoundingBox": {
              "Height": number,
              "Left": number,
              "Top": number,
              "Width": number
            },
            "Confidence": number,
            "Emotions": [
              {
                "Confidence": number,
                "Type": "string"
              }
            ],
            "EyeDirection": {
              "Confidence": number,
              "Pitch": number,
              "Yaw": number
            },
            "Eyeglasses": {
              "Confidence": number,
              "Value": boolean
            },
            "EyesOpen": {
              "Confidence": number,
              "Value": boolean
            },
            "FaceOccluded": {
              "Confidence": number,
              "Value": boolean
            },
            "Gender": {
              "Confidence": number,
              "Value": "string"
            },
            "Landmarks": [
              {
                "Type": "string",
                "X": number,
                "Y": number
              }
            ],
            "MouthOpen": {
              "Confidence": number,
              "Value": boolean
            },
            "Mustache": {
              "Confidence": number,
              "Value": boolean
            },
            "Pose": {
              "Pitch": number,
              "Roll": number,
              "Yaw": number
            },
            "Quality": {
              "Brightness": number,
              "Sharpness": number
            },
            "Smile": {
              "Confidence": number,
              "Value": boolean
            },
            "Sunglasses": {
              "Confidence": number,
              "Value": boolean
            }
          }
        }
      ],
      "OrientationCorrection": "string",
      "UnindexedFaces": [
        {
          "FaceDetail": {
            "AgeRange": {
              "High": number,
              "Low": number
            },
            "Beard": {
              "Confidence": number,
              "Value": boolean
            },
            "BoundingBox": {
              "Height": number,
              "Left": number,
              "Top": number,
              "Width": number
            },
            "Confidence": number,
            "Emotions": [
              {
                "Confidence": number,
                "Type": "string"
              }
            ],
            "EyeDirection": {
              "Confidence": number,
              "Pitch": number,
              "Yaw": number
            },
            "Eyeglasses": {
              "Confidence": number,
              "Value": boolean
            },
            "EyesOpen": {
              "Confidence": number,
              "Value": boolean
            },
            "FaceOccluded": {
              "Confidence": number,
              "Value": boolean
            },
            "Gender": {
              "Confidence": number,
              "Value": "string"
            },
            "Landmarks": [
              {
                "Type": "string",
                "X": number,
                "Y": number
              }
            ],
            "MouthOpen": {
              "Confidence": number,
              "Value": boolean
            },
            "Mustache": {
              "Confidence": number,
              "Value": boolean
            },
            "Pose": {
              "Pitch": number,
              "Roll": number,
              "Yaw": number
            },
            "Quality": {
              "Brightness": number,
              "Sharpness": number
            },
            "Smile": {
              "Confidence": number,
              "Value": boolean
            },
            "Sunglasses": {
              "Confidence": number,
              "Value": boolean
            }
          },
          "Reasons": [ "string" ]
        }
      ]
    }
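
To make the structure above concrete, here is a minimal Python sketch that pulls out the two pieces most apps need: the IDs of the faces that were indexed, and the reasons given for any faces that were skipped. It assumes the `response` text of the FacesIndexed event is JSON with exactly the keys shown above; the function name and the sample values are made up for illustration.

```python
import json
from typing import Dict, List


def summarize_index_faces(response_text: str) -> Dict[str, List]:
    """Collect indexed face IDs and the reasons for each unindexed face."""
    data = json.loads(response_text)
    indexed = [record["Face"]["FaceId"] for record in data.get("FaceRecords", [])]
    skipped = [entry.get("Reasons", []) for entry in data.get("UnindexedFaces", [])]
    return {"indexed_face_ids": indexed, "unindexed_reasons": skipped}


# Trimmed, made-up response with one indexed face and one skipped face.
sample = json.dumps({
    "FaceModelVersion": "7.0",
    "FaceRecords": [
        {"Face": {"FaceId": "11111111-2222-3333-4444-555555555555", "Confidence": 99.9}}
    ],
    "UnindexedFaces": [
        {"Reasons": ["LOW_SHARPNESS"], "FaceDetail": {}}
    ],
})
summary = summarize_index_faces(sample)
print(summary["indexed_face_ids"])
print(summary["unindexed_reasons"])
```

The face IDs collected this way are the values to pass on to AssociateFaces or SearchFaces.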

Once a face is indexed, you can create a user (if one does not already exist) and associate the face with the user ID. You can then search for faces by image, or search for faces similar to a given face ID.
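
The search events return their result as text. The sketch below, in Python, picks the strongest match from such a result. It assumes the text is JSON in the shape of the Amazon Rekognition search response, with a `FaceMatches` array whose entries hold a `Similarity` score and a `Face` object; the function name, the default threshold, and the sample values are hypothetical.

```python
import json
from typing import Optional, Tuple


def best_match(response_text: str, min_similarity: float = 90.0) -> Optional[Tuple[str, float]]:
    """Return (FaceId, Similarity) of the strongest match, or None if none qualifies."""
    matches = json.loads(response_text).get("FaceMatches", [])
    candidates = [
        (match["Face"]["FaceId"], float(match["Similarity"]))
        for match in matches
        if float(match["Similarity"]) >= min_similarity
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda pair: pair[1])


# Made-up search result with two candidate matches.
sample = json.dumps({
    "FaceMatches": [
        {"Similarity": 88.0, "Face": {"FaceId": "face-b"}},
        {"Similarity": 97.5, "Face": {"FaceId": "face-a"}},
    ]
})
print(best_match(sample))
```

Returning None when nothing clears the threshold lets the caller treat "no confident match" separately from an error.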

You can also compare faces in two images.

5. Purchase Extension

6. Additional Information

You can set up an AWS account with the help of this guide.

Thank you.

Hope it helps!