Would be good to have the full setup instructions for nocoDB with docker on a vps (assume a linux server....)

Yes....

A howto would be good, I know it is a bit off topic, but it would help others (me too) to get nocoDB set up correctly on a server, in order to use it with AI2.

I have a number of blog posts detailing the steps of setting up docker and docker compose on an Ubuntu VPS.

Before continuing, I would like to point out that Peter's instance isn't set up the same way as described in this tutorial. But I think the below setup is much easier for most people to get started using NocoDB.

Next, you will need to create a docker-compose.yml file and paste in the following content:

    version: '2'

    services:
      nginx-proxy:
        image: nginxproxy/nginx-proxy
        container_name: nginx-proxy
        ports:
          - "80:80"
          - "443:443"
        volumes:
          - conf:/etc/nginx/conf.d
          - vhost:/etc/nginx/vhost.d
          - html:/usr/share/nginx/html
          - dhparam:/etc/nginx/dhparam
          - certs:/etc/nginx/certs:ro
          - /var/run/docker.sock:/tmp/docker.sock:ro

      acme-companion:
        image: nginxproxy/acme-companion
        container_name: nginx-proxy-acme
        volumes_from:
          - nginx-proxy
        volumes:
          - certs:/etc/nginx/certs:rw
          - acme:/etc/acme.sh
          - /var/run/docker.sock:/var/run/docker.sock:ro

      db:
        image: mysql:5.7
        container_name: nocodb-db
        volumes:
          - nocodb-db:/var/lib/mysql
        environment:
          - MYSQL_RANDOM_ROOT_PASSWORD=yes
          - MYSQL_DATABASE=nocodb
          - MYSQL_USER=nocodb
          - MYSQL_PASSWORD=password
        healthcheck:
          test: ["CMD", "mysqladmin", "ping", "-h", "localhost"]
          timeout: 20s
          retries: 10

      nocodb:
        depends_on:
          db:
            condition: service_healthy
        image: nocodb/nocodb:latest
        container_name: nocodb
        environment:
          - VIRTUAL_HOST=noco.example.com
          - VIRTUAL_PORT=8080
          - LETSENCRYPT_HOST=noco.example.com
          - LETSENCRYPT_EMAIL=admin@example.com
          - NC_DB=mysql2://db:3306?u=nocodb&p=password&d=nocodb
          - NC_PUBLIC_URL=https://noco.example.com

    volumes:
      conf:
      vhost:
      html:
      dhparam:
      certs:
      acme:
      nocodb-db:

For this setup to work, several settings have to be changed. Make sure you have a DNS entry pointing to your VPS, and replace all occurrences of noco.example.com with your own domain name. Also don't forget to replace admin@example.com with your own email address and to replace the database password (it appears in both MYSQL_PASSWORD and NC_DB) with a secure password.
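The replacements described above can be scripted. The following is only a sketch: `customize_compose` is a hypothetical helper name, and it assumes the password consists of letters and digits only, because the same value is pasted into the NC_DB URL and into a sed replacement, where special characters would need escaping.

```shell
# Hypothetical helper: fill in the placeholders from the compose file above.
# Arguments: <compose file> <domain> <email> <database password>
# Assumption: the password contains only letters and digits.
customize_compose() {
  file="$1"; domain="$2"; email="$3"; pass="$4"
  sed \
    -e "s|noco\.example\.com|${domain}|g" \
    -e "s|admin@example\.com|${email}|g" \
    -e "s|MYSQL_PASSWORD=password|MYSQL_PASSWORD=${pass}|" \
    -e "s|p=password&|p=${pass}\&|" \
    "$file" > "${file}.tmp" && mv "${file}.tmp" "$file"
}
```

For example, `customize_compose docker-compose.yml db.mysite.org me@mysite.org Xk29fQ7aLm` (all three values are placeholders) rewrites the file in place. It is worth checking the result with `grep -n example.com docker-compose.yml` before starting the containers.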

Finally, we can execute Docker Compose to deploy the website.

$ docker-compose up -d

You can now visit NocoDB at https://noco.example.com/dashboard to finish the setup process.

I hope this makes things more clear. If not, feel free to ask any additional questions.

I just found this script that does almost exactly what I do in my previous post. You can run it using the following command:

$ curl https://raw.githubusercontent.com/nocodb/nocodb/master/docker-compose/letsencrypt/nc.sh | bash

Thanks @bartmathijssen

A couple of things:

My vps is running ubuntu 18.04 LTS (no option to update at the moment), any differences to docker and docker compose installation ? [EDIT] - I have had a look around and instructions for 18.04 appear to be the same (e.g. https://phoenixnap.com/kb/how-to-install-docker-on-ubuntu-18-04 / https://phoenixnap.com/kb/install-docker-compose-ubuntu)

I see mention of nginx, is this "inside" docker, because my http server is apache ?

You are correct, the Docker and Docker Compose installation should be the same for Ubuntu 18.04.

In my example, nginx runs inside a Docker container. This container generates the reverse-proxy configuration for the other containers running on that host. The acme-companion container generates Let's Encrypt certificates for the desired domain names.

You could use Apache to redirect to the Docker container. In this case you can remove the nginx-proxy and acme-companion containers from the docker-compose file.

Docker and Docker-Compose successfully installed on 18.04

Where to create this file ? I see from the docker docs that they say:

The default path for a Compose file is ./docker-compose.yml

This is OK as my logged in sudo user ?

Also I will probably need to use a non standard port, do I just change VIRTUAL_PORT= 8080 in the config/yml file?

Well, I put docker-compose.yml in my home folder, and edited as suggested, and changed VIRTUAL_PORT to 8088 and setup my firewall to allow that.

Running

docker-compose up -d

generates the following error:

Named volume "acme:/etc/acme.sh:rw" is used in service "acme-companion" but no declaration was found in the volumes section

It seems the yml file is being found, but docker is unable to handle the acme volume.

Additional info:

I am using the DNS name provided by my host for the server

This already has an SSL certificate from Let's Encrypt running on it

[EDIT]

Removed the nginx and acme sections from the yml file. This has worked, happy command line, nocodb up to date.

Now I need to configure Apache -

Apache commands:

sudo nano /etc/apache2/sites-available/Apache2Proxy.conf

sudo a2enmod proxy

sudo a2enmod proxy_http

sudo a2ensite Apache2Proxy.conf

sudo systemctl restart apache2.service

sudo docker-compose up -d

Apache2Proxy.conf contains:

    <VirtualHost *:80>
        # ServerName and ServerAlias changed to match yml file
        ServerName example.com
        ServerAlias www.example.com
        ServerAdmin webmaster@example.com
        ErrorLog ${APACHE_LOG_DIR}/error.log
        CustomLog ${APACHE_LOG_DIR}/access.log combined
        ProxyRequests Off
        <Proxy *>
            Order deny,allow
            Allow from all
        </Proxy>
        ProxyPass / http://127.0.0.1:8088/
        ProxyPassReverse / http://127.0.0.1:8088/
        <Location />
            Order allow,deny
            Allow from all
        </Location>
    </VirtualHost>

Hmmm - webpage shows "503 Service Unavailable"

Port 8080 is the port the application is running on, inside the container.

The named volume exception is my fault. I forgot to copy all volumes defined in the volumes section in the docker-compose file. The original post has now been changed to have the acme volume.

The VIRTUAL_HOST and VIRTUAL_PORT environment variables tell the nginx-proxy container which hostname to answer for and which container port to forward traffic to. But because you have removed the nginx containers, these environment variables have become redundant. I haven't tested this, but this configuration should be sufficient:

    version: '2'

    services:
      db:
        image: mysql:5.7
        container_name: nocodb-db
        volumes:
          - nocodb-db:/var/lib/mysql
        environment:
          - MYSQL_RANDOM_ROOT_PASSWORD=yes
          - MYSQL_DATABASE=nocodb
          - MYSQL_USER=nocodb
          - MYSQL_PASSWORD=password
        healthcheck:
          test: ["CMD", "mysqladmin", "ping", "-h", "localhost"]
          timeout: 20s
          retries: 10

      nocodb:
        depends_on:
          db:
            condition: service_healthy
        image: nocodb/nocodb:latest
        container_name: nocodb
        environment:
          - NC_DB=mysql2://db:3306?u=nocodb&p=password&d=nocodb
          - NC_PUBLIC_URL=https://noco.example.com

    volumes:
      nocodb-db:

The proxy has to point to the NocoDB container:

ProxyPass / http://nocodb:8080/

ProxyPassReverse / http://nocodb:8080/

Apache still not playing nicely

setting http://nocodb:8080/

Proxy Error

The proxy server received an invalid response from an upstream server.

The proxy server could not handle the request

Reason: DNS lookup failure for: nocodb

I may backtrack and try with nginx again....
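A likely explanation for both the 503 and the DNS lookup failure, not tested against this server: the name `nocodb` only resolves on Docker's internal network, so an Apache running directly on the host cannot look it up, and the compose file without nginx publishes no port on the host, so nothing is listening on 127.0.0.1:8088 either. One way around this is to publish the container's port 8080 on a local host port. The sketch below writes a `docker-compose.override.yml`, which Compose merges automatically with `docker-compose.yml`. The helper name `write_nocodb_override` is hypothetical, and host port 8088 is an assumption taken from the Apache config earlier in the thread.

```shell
# Hypothetical helper: write a docker-compose.override.yml into the directory
# that holds docker-compose.yml (defaults to the current directory).
# Assumptions: host port 8088 is free and the base file uses version '2'.
write_nocodb_override() {
  dir="${1:-.}"
  cat > "$dir/docker-compose.override.yml" <<'EOF'
version: '2'
services:
  nocodb:
    ports:
      # host 127.0.0.1:8088 -> container port 8080, reachable only locally
      - "127.0.0.1:8088:8080"
EOF
}
```

After calling `write_nocodb_override .` and running `docker-compose up -d` again, the Apache lines `ProxyPass / http://127.0.0.1:8088/` and `ProxyPassReverse / http://127.0.0.1:8088/` would point at the published port rather than at the container name.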

Backtracked and tried original yml file, all goes well until:

ERROR: for nginx-proxy Cannot start service nginx-proxy: driver failed programming external connectivity on endpoint nginx-proxy (f6f3b7ffb4c3568280470f27407a4bd54cdcee23d3edaa60bdcc3ed6cf2ec037): Error starting userland proxy: listen tcp4 0.0.0.0:443: bind: address already in use

ERROR: Encountered errors while bringing up the project.

I stopped apache and ran docker-compose again and this time it worked, but nginx gave me a 502 Bad Gateway on http (no response on https)
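The "bind: address already in use" error means another process on the host, here Apache, was already listening on port 443, so nginx-proxy could not claim it. A small sketch for checking this before starting the containers: `listeners_on_port` is a hypothetical filter that reads the output of `ss -tln` (or `ss -tlnp`) on standard input and keeps only the listening sockets bound to the given port.

```shell
# Hypothetical filter: read `ss -tln`-style output on stdin and print the
# LISTEN rows whose local address ends in ":<port>".
# Column 1 is the socket state, column 4 is "Local Address:Port".
listeners_on_port() {
  awk -v p="$1" '$1 == "LISTEN" && $4 ~ (":" p "$")'
}
```

On the server this could be used as `sudo ss -tlnp | listeners_on_port 443` and again for port 80. Any line printed names a process that has to be stopped or moved before nginx-proxy can bind to that port.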

Seems I am destined to fail here.

I also tried with npm/npx but that also failed, so have admitted defeat and restored my server to before all of this.

That's weird. I don't use Apache for my services, but looking at examples online changing the proxy to point to http://nocodb:8080/ should have worked. I'm not sure what is causing the 502 Bad Gateway, you can view the logs of your docker deployment using docker-compose logs -f. If you don't mind sharing them, I can take a look.

Thanks Bart, as I said above, I have moved on, this one is not for me so it seems, or my server setup is such that there is too much to undo to make it work. I might have another go when I am in the mood. Thanks for all your help and guidance, hopefully it will assist others to get setup correctly.

Too bad you couldn't get it to work.

I did a 1-Click Deploy and that is working too. Great for testing.

Playing with NocoDB. I like it very much.

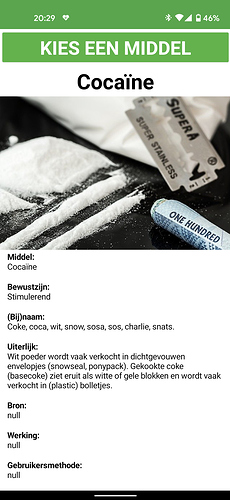

Made a small Dutch Drugs information app.

As you can see, not all fields have information, which is why some of them display null.

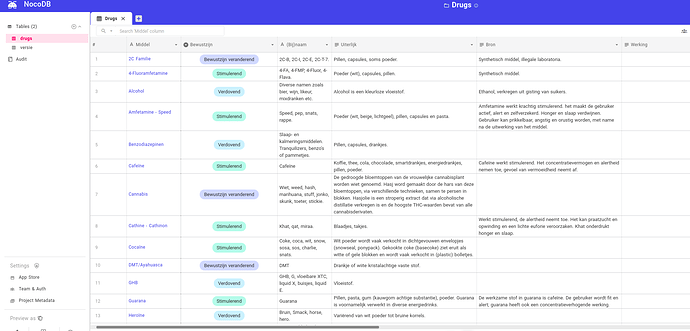

Below part of the database

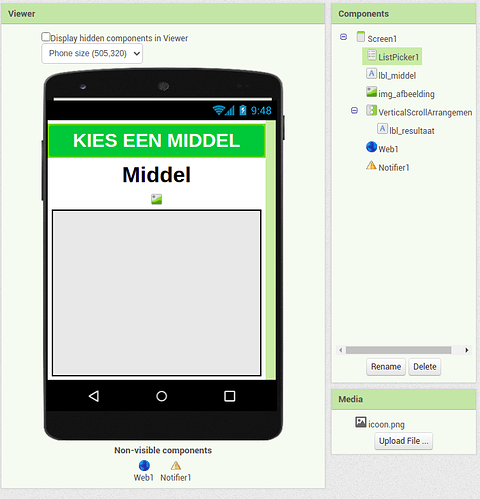

Here my design

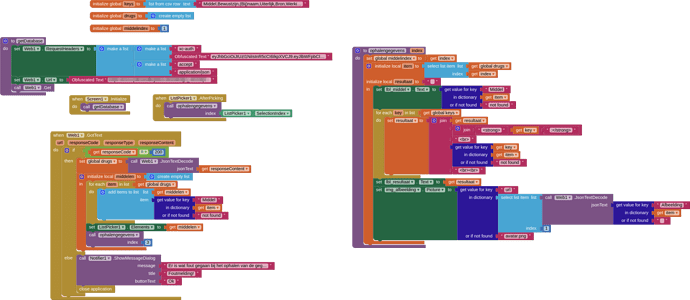

And here my blocks.

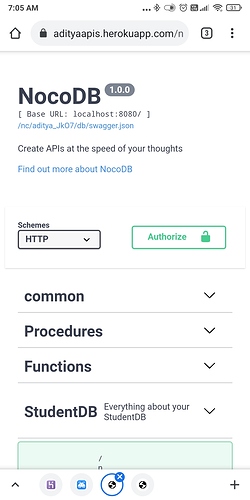

I guess you can not. Your url has to look something like

http://appname.herokuapp.com/nc/test_R9-9/api/v1/test

You can generate your url even from the Swagger API Doc

Then you have to replace the localhost part with your own url

In my example app the bit after the domain name looks like

nc/drugs_xScL/api/v1/drugs?fields=Middel%2CBewustzijn%2C(Bij)naam%2CUiterlijk%2CBron%2CWerking%2CGebruikersmethode%2CWerkingsduur%2CRisico's%2CWisselwerking%2CWet%2CMarktprijs%2CGewenning%2CAfhankelijkheid%2CAantoonbaar%20in%20urine%2C%20Afbeelding&limit=100
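The URL pattern above can be assembled from its parts. This is a minimal sketch, assuming the `/nc/<project>/api/v1/<table>` layout shown in the two examples. `nocodb_list_url` is a hypothetical helper, and it only percent-encodes `%`, commas and spaces, which is enough to reproduce the example above but is not a complete URL encoder.

```shell
# Hypothetical helper: build a list URL in the form shown above.
# Arguments: <base url> <project segment> <table> <comma-separated fields> <limit>
# Only "%", "," and " " in the field list are percent-encoded.
nocodb_list_url() {
  fields=$(printf '%s' "$4" | sed -e 's/%/%25/g' -e 's/,/%2C/g' -e 's/ /%20/g')
  printf '%s/nc/%s/api/v1/%s?fields=%s&limit=%s\n' "$1" "$2" "$3" "$fields" "$5"
}
```

For example, `nocodb_list_url http://appname.herokuapp.com drugs_xScL drugs 'Middel,Bewustzijn' 100` prints a URL ending in `drugs?fields=Middel%2CBewustzijn&limit=100`, which can then be pasted into the Web component in AI2.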