Please see the updated extension: [PAID] 🤖 Groq Extension - Interact with multiple LLMs with Agentic Capabilities and MCP Servers - LLMs, TTS & STT all in one extension (post #10 by sol_roll)

Old Post

🧩 GroqText

An extension for MIT App Inventor 2 that integrates AI text and vision models into applications using the Groq API, with streaming support. My other extensions

Built by Sarthak Gupta

Specifications

- Package: com.sarthakdev.groqtext

- Size: 20.68 KB

- Version: 1.2

- Minimum API Level: 7

- Updated On: 2025-05-25T18:30:00Z

- Built & documented using: FAST-CLI v2.8.1

Extension License: here

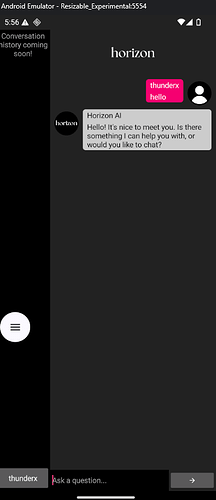

Introduction

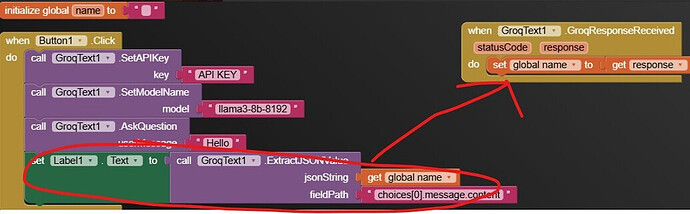

- Integrate a large number of AI models into your app through the Groq API

- Includes a generous free plan with a daily rate limit (no credit card required)

- Includes 2b, 8b and 70b parameter models

Features of Groq Inference

- Lightning-fast AI models

- Supports 30+ AI models from 5+ providers

- Best free plan: 500k tokens* daily, free to use in production

Events:

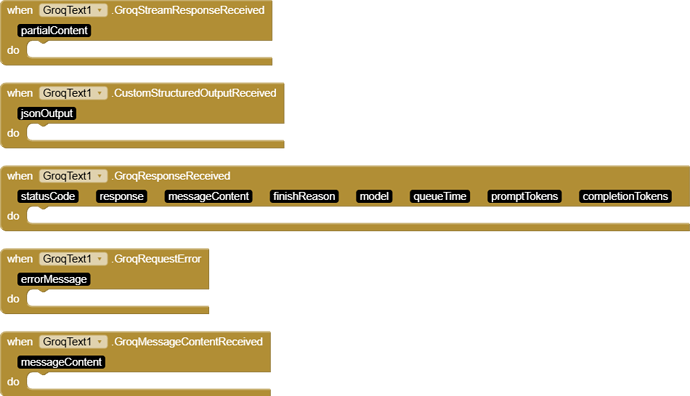

GroqText has a total of 5 events.

GroqStreamResponseReceived

Event triggered when a streaming response part is received

| Parameter | Type |
|---|---|
| partialContent | text |
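To give an idea of where each partialContent fragment might come from, here is a minimal Python sketch. It assumes the stream uses the OpenAI-style server-sent-event format (lines beginning with `data:`, ending with `data: [DONE]`), which Groq's chat completions endpoint follows. The function name `iter_partial_content` and the sample lines are made up for illustration; the extension's internal parsing may differ.

```python
import json


def iter_partial_content(sse_lines):
    """Yield text fragments from OpenAI-style server-sent-event lines."""
    for raw in sse_lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and comments
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream marker
        chunk = json.loads(payload)
        choices = chunk.get("choices") or []
        if not choices:
            continue
        text = choices[0].get("delta", {}).get("content")
        if text:
            yield text  # roughly one partialContent value per fragment


# Hypothetical sample stream, hand-written for this sketch.
sample = [
    'data: {"choices":[{"delta":{"role":"assistant"}}]}',
    "",
    'data: {"choices":[{"delta":{"content":"Hel"}}]}',
    'data: {"choices":[{"delta":{"content":"lo"}}]}',
    "data: [DONE]",
]
full_text = "".join(iter_partial_content(sample))
```

In an app you would typically append each fragment to a label as it arrives, much like the `join` call does here.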

CustomStructuredOutputReceived

Event triggered when custom structured output is received from the Groq API

| Parameter | Type |
|---|---|
| jsonOutput | text |

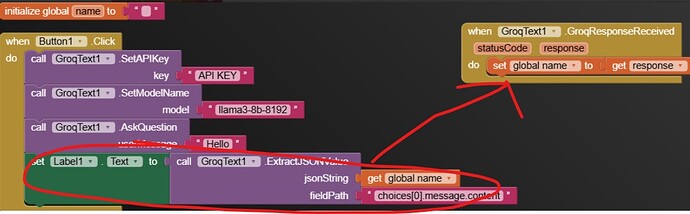

GroqResponseReceived

Event triggered when AI response is received

| Parameter | Type |
|---|---|
| statusCode | number |
| response | text |
| messageContent | text |
| finishReason | text |
| model | text |
| queueTime | text |
| promptTokens | text |
| completionTokens | text |
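For readers wondering how these parameters relate to the raw response, the following Python sketch shows one plausible mapping from an OpenAI-compatible chat completion body to the event values. The field locations (`choices[0].message.content`, `usage.queue_time`, and so on) are assumptions based on Groq's public response format, and `to_event_parameters` is a hypothetical helper, not part of the extension.

```python
import json


def to_event_parameters(status_code, body):
    """Map a chat completion JSON body to the event's parameter names."""
    data = json.loads(body)
    choice = data["choices"][0]
    usage = data.get("usage", {})
    return {
        "statusCode": status_code,
        "response": body,  # the raw JSON text, unchanged
        "messageContent": choice["message"]["content"],
        "finishReason": choice.get("finish_reason", ""),
        "model": data.get("model", ""),
        # The table lists these three as text, so convert to strings.
        "queueTime": str(usage.get("queue_time", "")),
        "promptTokens": str(usage.get("prompt_tokens", "")),
        "completionTokens": str(usage.get("completion_tokens", "")),
    }


# Hand-written sample body for illustration only.
sample_body = json.dumps({
    "model": "llama-3.3-70b-versatile",
    "choices": [{
        "message": {"role": "assistant", "content": "Hi there"},
        "finish_reason": "stop",
    }],
    "usage": {"queue_time": 0.02, "prompt_tokens": 12, "completion_tokens": 3},
})
event = to_event_parameters(200, sample_body)
```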

GroqRequestError

Event triggered when an error occurs in Groq API request

| Parameter | Type |
|---|---|
| errorMessage | text |

GroqMessageContentReceived

Event triggered when AI message content is extracted

| Parameter | Type |
|---|---|
| messageContent | text |

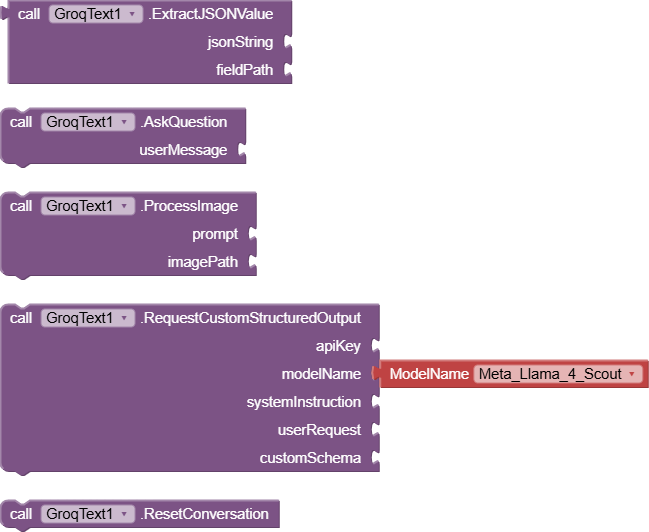

Methods:

GroqText has a total of 5 methods.

ExtractJSONValue

Extract a specific value from the JSON response

| Parameter | Type |
|---|---|
| jsonString | text |
| fieldPath | text |
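The post does not say what syntax fieldPath accepts. As a rough illustration only, the Python sketch below assumes a dot-separated path in which numeric segments index into lists (for example `choices.0.message.content`). Both the path syntax and the helper name `extract_json_value` are assumptions, so check the extension's actual behaviour before relying on it.

```python
import json


def extract_json_value(json_string, field_path):
    """Walk a parsed JSON document along a dot-separated path (assumed syntax)."""
    current = json.loads(json_string)
    for segment in field_path.split("."):
        if isinstance(current, list):
            current = current[int(segment)]  # numeric segment indexes a list
        else:
            current = current[segment]  # otherwise treat it as an object key
    return current


# Hand-written sample document for illustration only.
sample_json = '{"choices": [{"message": {"content": "Hi"}}], "usage": {"total_tokens": 15}}'
content = extract_json_value(sample_json, "choices.0.message.content")
total = extract_json_value(sample_json, "usage.total_tokens")
```

A missing key or an out-of-range index raises an exception in this sketch; a real implementation would more likely return an empty value or raise the error event.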

AskQuestion

Ask a question to the AI

| Parameter | Type |
|---|---|
| userMessage | text |

ProcessImage

Process an image with a text prompt using a local image path

| Parameter | Type |
|---|---|
| prompt | text |
| imagePath | text |
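As a sketch of what sending a local image could involve, the Python example below reads a file, base64-encodes it, and wraps it in the OpenAI-style `image_url` message format that Groq's vision models accept. Whether the extension does exactly this is an assumption, and `build_vision_message` is a hypothetical name.

```python
import base64
import mimetypes


def build_vision_message(prompt, image_path):
    """Build a user message that carries a prompt plus a base64 data URL."""
    mime, _ = mimetypes.guess_type(image_path)
    mime = mime or "image/jpeg"  # fall back when the extension is unknown
    with open(image_path, "rb") as image_file:
        encoded = base64.b64encode(image_file.read()).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {
                "type": "image_url",
                "image_url": {"url": f"data:{mime};base64,{encoded}"},
            },
        ],
    }
```

The returned dictionary would go into the `messages` list of a chat completion request, with the model set to one of the vision models.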

RequestCustomStructuredOutput

Request custom structured output from Groq API

| Parameter | Type |
|---|---|
| apiKey | text |
| modelName | text |
| systemInstruction | text |
| userRequest | text |
| customSchema | text |
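How the extension combines these parameters is not documented here. One common approach, shown below as a Python sketch under that assumption, is to append the schema to the system instruction and request JSON mode via `response_format`. The helper name `build_structured_request` and the sample schema are hypothetical; the apiKey would normally be sent separately in an `Authorization: Bearer` header.

```python
import json


def build_structured_request(model_name, system_instruction, user_request, custom_schema):
    """Build a chat completion payload that asks for JSON matching a schema."""
    schema = json.loads(custom_schema)  # fail early if the schema text is not valid JSON
    system_text = (
        f"{system_instruction}\n"
        "Respond only with JSON matching this schema:\n"
        f"{json.dumps(schema, indent=2)}"
    )
    return {
        "model": model_name,
        "messages": [
            {"role": "system", "content": system_text},
            {"role": "user", "content": user_request},
        ],
        "response_format": {"type": "json_object"},  # JSON mode
    }


# Hypothetical schema and request, for illustration only.
schema_text = '{"type": "object", "properties": {"name": {"type": "string"}}}'
payload = build_structured_request(
    "llama-3.3-70b-versatile",
    "You extract contact details.",
    "Ann can be reached at ann@example.com",
    schema_text,
)
```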

ResetConversation

Reset the conversation by clearing the chat history
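To illustrate what clearing the chat history means in practice, here is a small Python sketch of a conversation buffer. The class `Conversation` and its method names are invented for this example and only approximate how an extension like this might keep history internally.

```python
class Conversation:
    """Keeps prior turns so each request can include earlier context."""

    def __init__(self, history_enabled=True):
        self.history_enabled = history_enabled
        self._messages = []

    def messages_for(self, user_message):
        """Return the message list to send for this turn."""
        turn = {"role": "user", "content": user_message}
        if not self.history_enabled:
            return [turn]  # no context: only the new question is sent
        return self._messages + [turn]

    def record(self, user_message, assistant_reply):
        """Store a completed exchange when history is enabled."""
        if self.history_enabled:
            self._messages.append({"role": "user", "content": user_message})
            self._messages.append({"role": "assistant", "content": assistant_reply})

    def reset(self):
        """Clear all stored turns, like ResetConversation."""
        self._messages.clear()


conversation = Conversation()
conversation.record("Hi", "Hello! How can I help?")
with_history = conversation.messages_for("What did I just say?")
conversation.reset()
after_reset = conversation.messages_for("What did I just say?")
```

Because every stored turn is resent with each request, long conversations use more tokens, which is one reason to reset or disable history.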

Setters:

GroqText has a total of 11 setter properties.

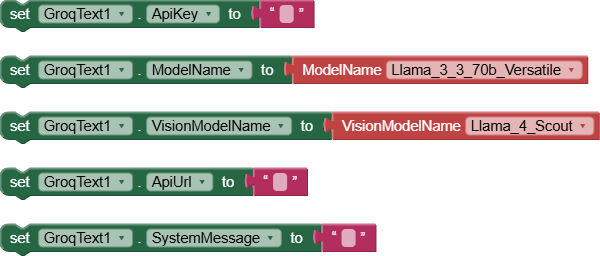

ApiKey

Set the Groq API Key

- Input type: text

ModelName

Set the AI Model Name for text tasks

- Input type: text

- Helper class: ModelName

- Helper enums: Llama_3_3_70b_Versatile, Llama_3_1_8b_Instant, Llama3_70b_8192, Llama3_8b_8192, Gemma2_9b_It, Meta_Llama_Llama_Guard_4_12B, Allam_2_7b, DeepSeek_R1_Distill_Llama_70b, Meta_Llama_4_Maverick, Meta_Llama_4_Scout, Mistral_Saba_24b, Qwen_Qwq_32b, Compound_Beta, Compound_Beta_Mini

VisionModelName

Set the AI Model Name for vision tasks

- Input type: text

- Helper class: VisionModelName

- Helper enums: Llama_4_Scout, Llama_4_Maverick

ApiUrl

Set the API Endpoint URL

- Input type: text

SystemMessage

Set the system message for the AI

- Input type: text

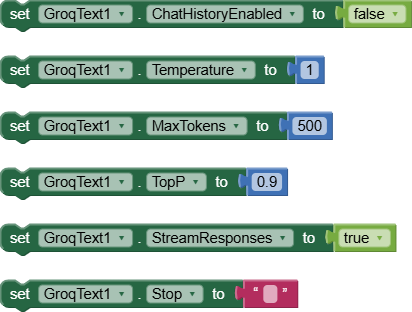

ChatHistoryEnabled

Enable or disable chat history

- Input type: boolean

Temperature

Set the temperature

- Input type: number

MaxTokens

Set the max tokens

- Input type: number

TopP

Set the top P value

- Input type: number

StreamResponses

Set whether to stream responses for text and vision tasks

- Input type: boolean

Stop

Set the stop value

- Input type: text
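To show how these properties might translate into an actual request, the Python sketch below builds an OpenAI-compatible chat completion body. The JSON field names (`temperature`, `max_tokens`, `top_p`, `stream`, `stop`) are the ones Groq's API uses. The mapping from each property to a field, the default values, and the helper name `build_chat_payload` are assumptions made for this example.

```python
def build_chat_payload(settings, user_message):
    """Turn property values (keyed by property name) into a request body."""
    messages = []
    if settings.get("SystemMessage"):
        messages.append({"role": "system", "content": settings["SystemMessage"]})
    messages.append({"role": "user", "content": user_message})

    payload = {
        "model": settings["ModelName"],
        "messages": messages,
        # Defaults below are placeholders, not the extension's real defaults.
        "temperature": settings.get("Temperature", 1.0),
        "max_tokens": settings.get("MaxTokens", 1024),
        "top_p": settings.get("TopP", 1.0),
        "stream": settings.get("StreamResponses", False),
    }
    if settings.get("Stop"):
        payload["stop"] = settings["Stop"]  # only sent when a stop value is set
    return payload


# Hypothetical property values for illustration.
example_settings = {
    "ModelName": "llama-3.3-70b-versatile",
    "SystemMessage": "You are a helpful assistant.",
    "Temperature": 0.7,
    "MaxTokens": 256,
    "StreamResponses": True,
}
example_payload = build_chat_payload(example_settings, "Hello!")
```

A body like this would be sent as JSON to the URL in ApiUrl, with the ApiKey value in an `Authorization: Bearer` header.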

Getters:

GroqText has a total of 11 getter properties.

ApiKey

Get the Groq API Key

- Return type: text

ModelName

Get the AI Model Name for text tasks

- Return type: text

VisionModelName

Get the AI Model Name for vision tasks

- Return type: text

ApiUrl

Get the API Endpoint URL

- Return type: text

SystemMessage

Get the system message for the AI

- Return type: text

ChatHistoryEnabled

Get whether chat history is enabled

- Return type: boolean

Temperature

Get the temperature

- Return type: number

MaxTokens

Get the max tokens

- Return type: number

TopP

Get the top P value

- Return type: number

StreamResponses

Get whether responses are streamed for text and vision tasks

- Return type: boolean

Stop

Get the stop value

- Return type: text

Try the extension for free with GroqTextMini (Free)

The differences between the free and paid versions:

| GroqTextMini | GroqText |
|---|---|
| Free | Paid ($5.99) |
| Use llama-8b model | Use 30+ AI Models (Llama, Gemma, Mixtral, DeepSeek, Qwen, Distilled models) |
| 8b model | 1b, 2b, 3b, 8b, 32b, 70b, 80b models |
| 500 tokens | Unlimited tokens depending on model capacity |
| No Image Support | Image Support |
| No Code Execution | Code Execution Support |
| No Search Support | Search Support |

GroqTextMini: com.sarthakdev.groqtextmini.aix (8.3 KB)

Purchase Extension

You can purchase the full GroqText extension instantly from the link below for just $5.99.

https://buymeacoffee.com/techxsarthak/e/356706