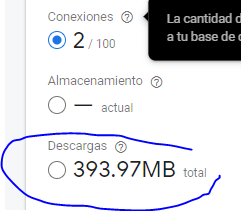

I've been testing sending data to my Firebase account and measuring how much data (in KB) each character I send costs, including variable names, tags, and list names. After an 87,349-string test, the result was 17 bytes in Firebase per character sent while connected through the AI Companion on my phone. The problem starts when I build the app and run the same test on the same phone: consumption increases 20.97 times, so the same app, once built, needs 356 bytes per character. What do I need to do to get the same result in the built app as in AI2 test mode via the AI Companion?
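As a quick sanity check of the figures in the question, applying the 20.97× multiplier to the 17-byte Companion baseline does give roughly 356 bytes per character. A minimal Python sketch (the names are illustrative and not part of App Inventor or Firebase):

```python
# Figures taken from the question above (bytes stored in Firebase per character sent).
COMPANION_BYTES_PER_CHAR = 17   # measured with the AI Companion
BUILT_APP_MULTIPLIER = 20.97    # observed increase after building the app

def built_app_bytes_per_char(companion_bytes: float, multiplier: float) -> float:
    """Per-character cost of the built app, derived from the Companion baseline."""
    return companion_bytes * multiplier

per_char = built_app_bytes_per_char(COMPANION_BYTES_PER_CHAR, BUILT_APP_MULTIPLIER)
print(f"Built app: {per_char:.0f} bytes per character")  # Built app: 356 bytes per character
```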

Please show your relevant blocks / Firebase console data / etc.

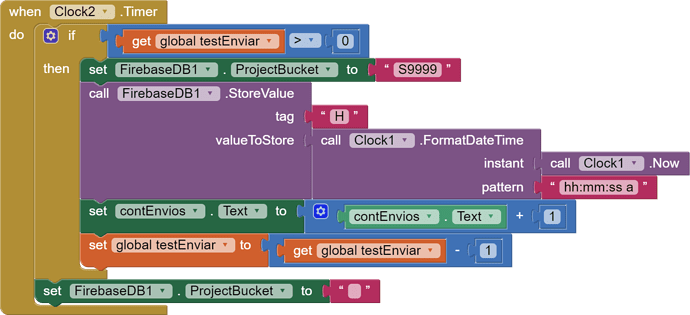

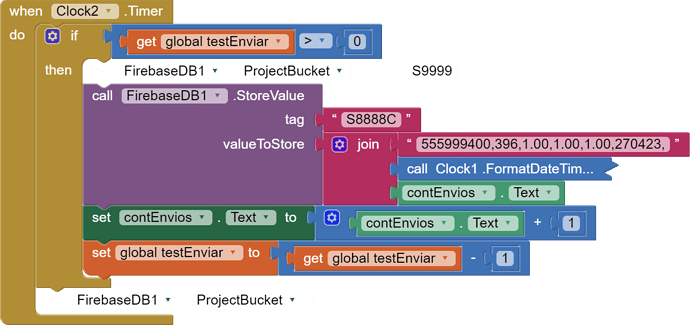

I simplified the code to check whether it reproduces the result of my original code (which has 7,345 blocks), and both versions give the same result.

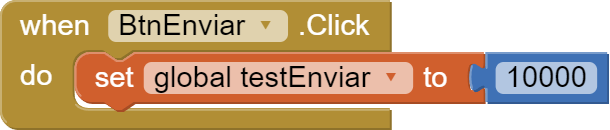

In this test I send 10,000 strings, each containing the current Clock Now time, one every 100 ms. When I run this code through the AI Companion the result is 0.088 KB per string, and when I run it in the built app it is 10 KB per string.

In summary, the first test (AI Companion) consumed 0.88 MB in total and the second test (built app) consumed 102.54 MB.
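The totals above can be cross-checked against the per-string figures and the 100 ms interval. This sketch assumes the post's Kb/Mb mean decimal kilobytes/megabytes (1 MB = 1000 KB), which is what makes 10,000 × 0.088 KB come out to 0.88 MB:

```python
# Figures from the 10,000-string test; Kb/Mb are read as decimal KB/MB (an assumption).
STRINGS_SENT = 10_000
INTERVAL_S = 0.1                 # one string every 100 ms
COMPANION_KB_PER_STRING = 0.088  # AI Companion
BUILT_APP_TOTAL_MB = 102.54      # built app

companion_total_mb = STRINGS_SENT * COMPANION_KB_PER_STRING / 1000
built_app_kb_per_string = BUILT_APP_TOTAL_MB * 1000 / STRINGS_SENT
test_duration_min = STRINGS_SENT * INTERVAL_S / 60
ratio = BUILT_APP_TOTAL_MB / companion_total_mb

print(f"Companion total: {companion_total_mb:.2f} MB")            # -> 0.88 MB
print(f"Built app per string: {built_app_kb_per_string:.2f} KB")  # -> 10.25 KB
print(f"Test duration: {test_duration_min:.1f} min")              # -> 16.7 min
print(f"Built app / Companion: {ratio:.1f}x")                     # -> 116.5x
```

The built-app figure works out to about 10.25 KB per string, consistent with the ~10 KB reported, and the overall ratio (about 116×) is much larger than the 20.97× seen in the first test.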

I'm not even going to ask why you are doing what you are doing... there must be some reason...

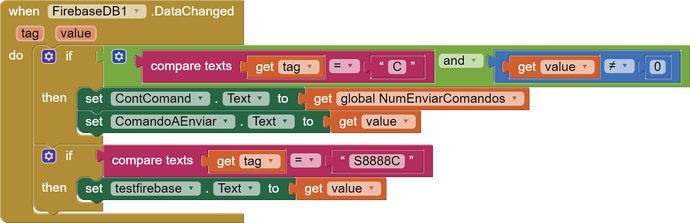

You do not show how you download the data you are uploading, in order to generate your statistics (also, how are you doing this?)

These tests are meant to find the best way to read sensors and keep several apps open without using a lot of data. I normally receive more than 200,000 values per day and need to show graphs and much more. Today I changed some functions, erased every ProjectBucket, and also modified the database, so now I spend 80 bytes per uploaded string of 45 characters and 84 bytes per string read with the Firebase DataChanged event. If I use the GotValue event instead, data use in Firebase increases at least fourfold. The problem is now solved: at the beginning I needed 301.79 MB per day, and with these changes I need 21.73 MB. I was looking for someone who had already done something like this before.
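For planning a daily data budget like the one described, a rough model multiplies the number of strings per day by the bytes spent uploading each string plus the bytes spent delivering it to each reading app. This is a hypothetical sketch, not an official Firebase formula. It assumes each string is uploaded once and received once by each open app, models the costlier GotValue path as a simple multiplier on the DataChanged read cost, and uses decimal megabytes. It will not necessarily reproduce the measured 21.73 MB, since the post does not say how the 200,000 values map onto strings.

```python
def daily_mb(strings_per_day: int,
             upload_bytes: float,
             read_bytes: float,
             readers: int = 1,
             read_multiplier: float = 1.0) -> float:
    """Hypothetical daily traffic estimate in decimal MB.

    Assumes each string is uploaded once and then delivered once to each
    reading app; read_multiplier scales the per-read cost (e.g. to model a
    read path that costs several times more than DataChanged).
    """
    per_string = upload_bytes + readers * read_bytes * read_multiplier
    return strings_per_day * per_string / 1_000_000

# Per-string costs reported above: 80 bytes up, 84 bytes per DataChanged read.
with_data_changed = daily_mb(200_000, 80, 84)
with_read_cost_4x = daily_mb(200_000, 80, 84, read_multiplier=4.0)
print(f"DataChanged, one reader: {with_data_changed:.1f} MB/day")   # -> 32.8
print(f"4x read cost, one reader: {with_read_cost_4x:.1f} MB/day")  # -> 83.2
print(f"Reported reduction: {301.79 / 21.73:.1f}x")                 # -> 13.9
```

The `readers` parameter reflects the goal of keeping several apps open: under this model, every additional listening app adds another read cost per string.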