Many thanks for your input,

Ball - See response to ewpatton.

SpeechRecognition - See my previous reply clarifying this, which concludes that the issue is with Gboard and the Android version. If Huawei releases an upgrade to Android 9, we will see. As I said in my previous reply, I don’t think controlling my app with speech is as efficient as a ball-on-canvas finger pad/joystick, but I like to try all options.

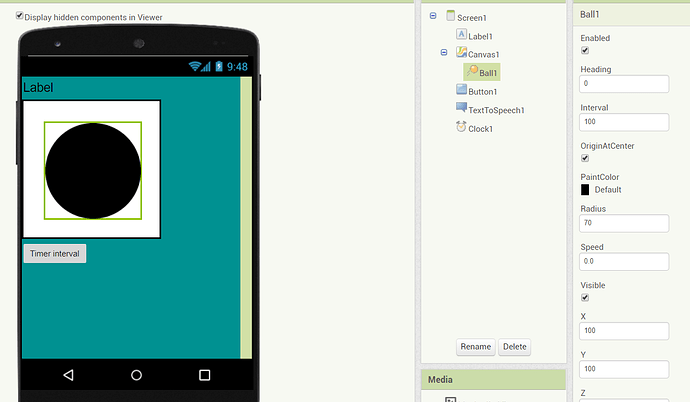

Hmmm, I just cleared everything from Screen1, as I am definitely going to use a ball on a canvas rather than buttons.

I created a label, a canvas and a ball with the centre-origin option checked, plus handlers for the Dragged and EdgeReached events, and it works as expected on my mobile.

I have a hunch: I am using the app on my tablet in split-screen mode, using 1/4 of the screen. The ball moves OK, but I’m wondering whether this is affecting the position. I will experiment with sizes (pixels, percentage, automatic, etc.) and report back.

UPDATE, and apologies for wasting your time: it’s the timer that’s causing it, so I need to sort that out.

No worries. I'm glad you were able to identify the underlying problem.

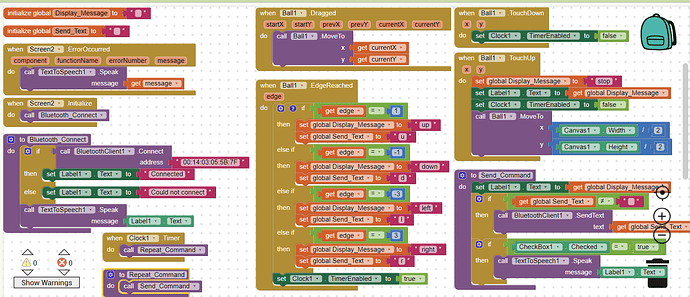

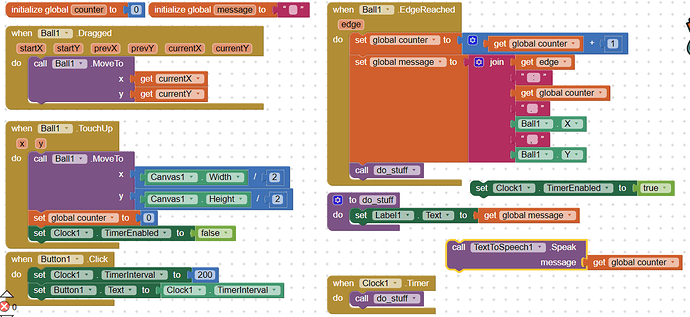

Okay! I have this working acceptably well, but more by luck than judgement, and I like to understand what I’m doing.

I have created a simple ball on a canvas with a timer, so it now looks like the image. If I don’t use the timer it works as expected, BUT it is far too fast for me to control my stepper motors, so I need to introduce a Timer. Incidentally, if I add TextToSpeech and speak the counter number, it slows things down to a decent level, BUT I don’t want that.

If I replace the call to do_stuff at the bottom of the EdgeReached event with Timer.Enabled = true, EdgeReached carries on firing in the background and the do_stuff procedure gets triggered erratically. I have tried changing the timer interval from 100 to 2000 ms and it makes little difference.
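For readers with the same problem, one common way to tame a fast event stream is to let the drag/edge events only record the latest target and let a permanently running timer be the only place a motor command is sent. The sketch below is plain Python, not App Inventor blocks, and everything in it (`MotorController`, `on_dragged`, `on_timer`, `simulate`) is a hypothetical stand-in; it assumes the timer is always enabled rather than being switched on from EdgeReached.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Point = Tuple[float, float]


@dataclass
class MotorController:
    """Hypothetical model: events record the latest ball position,
    and the timer tick is the only place a motor command is issued."""
    pending: Optional[Point] = None  # latest position not yet acted on
    commands: List[Tuple[int, Point]] = field(default_factory=list)

    def on_dragged(self, x: float, y: float) -> None:
        # Fires as fast as the UI delivers drag events: just overwrite the target.
        self.pending = (x, y)

    def on_timer(self, now_ms: int) -> None:
        # Fires once per timer interval: at most one command per tick.
        if self.pending is not None:
            self.commands.append((now_ms, self.pending))  # stand-in for do_stuff
            self.pending = None


def simulate(drag_period_ms: int, interval_ms: int, duration_ms: int) -> List[Tuple[int, Point]]:
    """Replay a steady drag and a fixed-interval timer on a 1 ms clock."""
    ctrl = MotorController()
    for t in range(duration_ms + 1):
        if t % drag_period_ms == 0:
            ctrl.on_dragged(float(t), 0.0)
        if t > 0 and t % interval_ms == 0:
            ctrl.on_timer(t)
    return ctrl.commands


if __name__ == "__main__":
    cmds = simulate(drag_period_ms=10, interval_ms=200, duration_ms=1000)
    print(len(cmds), cmds[0])
```

With a drag event every 10 ms and a 200 ms timer over one second, this issues five commands instead of around a hundred, each using the most recent position, so the motor rate is set by the timer interval alone.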

I have also realised that the ball’s TouchUp event doesn’t get triggered if I move my finger past the outer edge of the ball, which is understandable.

So the bottom line is: am I missing something simple here, and is there a better way to do it? It’s a nice, intuitive way to control my motors. I am new to this and loving the capabilities.

This is the Design screen, BTW:

Dear Dave,

Maybe I’m coming to this topic too late and you have already solved your problem.

Anyway, since I had the same problem and was able to solve it, I would like to share how I did it (at least, how it works for me :-)).

I have developed a digital dashboard for my old convertible car, and I wanted to give it voice commands, like a hands-free display, without an internet connection (offline use).

I used the Puravida extension (thanks to Taifun!!!) so that the speech recognizer is always listening, without the need to hold down a button while speaking, and works in offline mode.

It was working perfectly on Samsung and Xiaomi phones, but on a low-cost Chinese tablet it always gave me “server error”. The problem was that the language (Italian) was not available as an offline language in the tablet’s “voice input” settings.

Luckily, the tablet’s list of “possible” languages included Italian, among others, so I just downloaded it from the web and it became available in the list of usable ones. Everything can be done from the offline voice configuration menu of the tablet (or phone).

As soon as it was loaded, the tablet started to understand my speech.

Maybe this is not your case, if your mother tongue is English and voice recognition already works in English, but it might be helpful for AI2 users of other nationalities.

Stay healthy