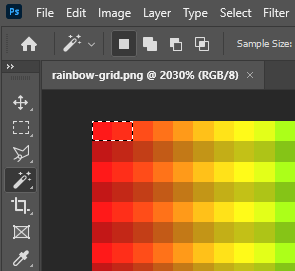

Getting somewhere. Made a pseudo rainbow image 81 x 81 pixels.

It returns the expected red values of 204 and 199.

The blocks get the centre pixel of each of the 9x9-pixel "sections" in the first column.
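For anyone who wants to check the coordinates outside of the blocks editor, here is a minimal Python sketch of the same idea. It assumes 0-based pixel coordinates and that the 81 x 81 image divides evenly into 9x9 sections; the function name `section_centres` is made up for illustration and is not an App Inventor block.

```python
def section_centres(image_size=81, section_size=9, column=0):
    """Return the 0-based (x, y) centre of every section in one column of sections."""
    half = section_size // 2                 # 4 for a 9x9 section
    x = column * section_size + half         # x is fixed for the whole column
    rows = image_size // section_size        # 9 sections down an 81-pixel image
    return [(x, row * section_size + half) for row in range(rows)]


centres = section_centres()
print(centres[:3])
```

The centres step by the section size, so the first column is sampled at y = 4, 13, 22 and so on, always at x = 4.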

Ok. So there are a couple of issues here. First, the documentation is underspecified, but the indexing on the Canvas (contrary to App Inventor conventions) starts at (0, 0) and goes to (width - 1, height - 1). Second, the Canvas bitmap is scaled based on the DPI of the device. This has the unfortunate side effect of changing the behavior of the algorithm based on the screen density of the target device. For example, I ran the exact same code on an emulator representing an mdpi Android device and an xxhdpi device and got different results, both because the scaling can result in bilinear interpolation of the pixel data and because, at sufficiently high DPIs, the pixels are duplicated in their entirety.
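To make the two issues concrete, here is a rough Python sketch of the arithmetic only. The scale factors are the standard Android density multipliers. Whether the Canvas applies exactly these factors, and whether its pixel blocks expect scaled or unscaled coordinates, is an assumption here and not something confirmed in this thread. The helper names are hypothetical.

```python
import math

# Standard Android density multipliers relative to mdpi (160 dpi).
DENSITY_SCALE = {"mdpi": 1.0, "hdpi": 1.5, "xhdpi": 2.0, "xxhdpi": 3.0}


def valid_range(width, height):
    """0-based Canvas indexing: (0, 0) through (width - 1, height - 1)."""
    return (0, 0), (width - 1, height - 1)


def scaled_sample_point(ix, iy, scale):
    """Map source-image pixel (ix, iy) to the middle of the area it would
    cover if the bitmap were uniformly enlarged by `scale` (assumption)."""
    return (math.floor((ix + 0.5) * scale), math.floor((iy + 0.5) * scale))


for name, scale in DENSITY_SCALE.items():
    print(name, scaled_sample_point(0, 1, scale))
```

With a factor of 1.0 the source pixel (0, 1) maps to itself, while with a factor of 3.0 it lands at (1, 4), the middle of a 3x3 block of duplicated pixels. Non-integer factors such as 1.5 are where interpolation between neighbours would be expected to blur the values.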

A long-term solution would be to fix the Canvas API to be consistent across screen resolutions and to fit the 1-based indexing of App Inventor lists rather than the 0-based indexing aligned with the Android API. However, both of these changes need to be done in a way that won't break existing apps.

Do you only need to use that 32x32 image you showed in your first post, or will your app use any image in the canvas? If it's only that palette gradient, I MAY have a solution for that.

Exactly. In my opinion, correct reading of pixel colors at all resolutions is not possible now. The application user would have to experiment with the resolution.

And it would be very cool to control RGB LED matrices by displaying Canvas images on them.

Hello all, my apologies for the extremely late reply. I have been working around hospitals (IT) and it has taken all of my time! I don't just need 32x32 images, and I have a newer Android phone where it works much better (almost a pixel-for-pixel match). As such, I have taken the project a little further (though my Bluetooth is my issue now!).

I have got a working canvas-to-RGB-LED example, which I will post images of next. Right now it's operating at a 64x64 resolution.

Some examples for you all to see (and maybe be inspired by).

I will get some more now that I have a bit more time, and I will work on this further. I think I might need to sample the image, considering I will be able to make it larger than 64 pixels (I am currently also taking an image with the camera to reproduce on the LED strip).

@Jae_Chipko, here is another idea. You have a 32x32 = 1024 pixel image.

Divide the image into squares of 8x8 = 64 pixels.

Find the average color of each of those squares.
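The steps above can be sketched in Python as follows. This is only an illustration of block averaging on a plain list of RGB tuples, not App Inventor code. The function name `block_averages` and the synthetic banded test image (alternating rows with red values of 255 and 195) are assumptions made for the example.

```python
def block_averages(pixels, block=8):
    """Average the (r, g, b) values inside each block x block square.

    `pixels` is a list of rows, each row a list of (r, g, b) tuples.
    Returns a smaller grid holding one averaged colour per square.
    """
    height = len(pixels)
    width = len(pixels[0])
    averages = []
    for top in range(0, height, block):
        row = []
        for left in range(0, width, block):
            # Collect every pixel in this square (clipped at the image edge).
            cells = [pixels[y][x]
                     for y in range(top, min(top + block, height))
                     for x in range(left, min(left + block, width))]
            n = len(cells)
            row.append(tuple(round(sum(c[i] for c in cells) / n) for i in range(3)))
        averages.append(row)
    return averages


# Synthetic 32x32 image: even rows have red = 255, odd rows have red = 195.
image = [[(255 if y % 2 == 0 else 195, 0, 0) for x in range(32)] for y in range(32)]
result = block_averages(image)
print(len(result), len(result[0]), result[0][0])
```

A 32x32 image gives a 4x4 grid of averages. Note that on a banded image the average falls between the light and dark values, so this suits reducing an image for a small LED matrix more than recovering exact per-pixel values.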

Also, in my view the banding is affecting this. Try with this image:

![]()

Also, think about it for a moment: do you think only the first pixel should be 255? I think the pixel below it should also be 255, because the picture looks like 3-4 light pixels followed by 3-4 dark pixels below them.

So your assumption is just wrong.

If you don't mind, try drawing the returned values back into an image. If you want proof, both will be the same.

In the original image I used Photoshop to make a colour gradient and then made darker bands in alternate pixels. When I try to extract the RGB values using the canvas, it works at the phone's resolution. If it were pixel perfect, the top-left red value would be 255 and the next red value underneath would be 195. This was a test image with banding.

No, I don't think so. For me the top-left colour (red) and the colour below it are the same; only after 3-4 pixels does it become dark red. So the result should be right; only the approach is wrong.

Is it the same when extracting it using GetBackgroundPixelColor? If so, I think you are seeing the variance of Android displays. The original image is per pixel as below:

Juan is right: to avoid errors, you need to have a region of the same colour, not just a single pixel. The centre pixel of the region should hold the correct colour value. You also need to use something like Paint to produce the image, to avoid the anti-aliasing, dithering and super-sampling that Photoshop etc. may apply automatically.

Fine, just a thought.

Seems you are going around in circles...

Actually, I felt bad because I hadn't posted in a couple of weeks, but I wanted to say I had managed to make it work on another phone. Yes, it would be nice to have per-pixel coverage without processing it first, but it's just a project that I started years ago and managed to get so much further than I ever thought possible.

I am interested in your sampling method. I honestly thought a pixel-by-pixel reproduction was going to be the simplest, but in reality (and as has been stated), the core canvas aspect would need revisions and a long-term plan. This project all started from some lights that I coded to write text; I designed my own font, 8 pixels high. This year (and after playing with AI2), I learned I could read pixels from an image. Thankfully, I have it mostly working on another phone, and other things are letting me down (my poor BLE data sending code!).

Suffice to say, I've had a lot of fun since then coding with the camera to take a photo to reproduce in RGB LEDs. I convert it to a canvas image of 64x64 but, as you are all saying, it'd be best to sample within the limitations of the canvas functionality.

These photos are from a propeller display?

I know it's not a proper question here, but I'm just curious: does Kodular handle this the same way? Maybe @Anke or someone else who is familiar with that too can answer my question.

I must admit, I tried another free version of this and found the exact same problem (presumably they just built theirs on top of AI2; AI2, in my opinion, is still the best).

My only solution without the excellent ideas listed (sampling areas) is to use another phone.

The photos are from LEDs mounted to a server rail lol!