No

The difference between free and paid is described here

Taifun

You can use GroqVision for that

The model responds to any question asked in Arabic.

Model Name: Allam

Developer: SDAIA

Here's the ID: allam-2-7b

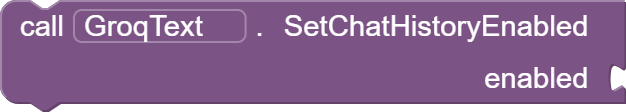
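Outside App Inventor, the same model ID can be passed to Groq's OpenAI-compatible chat completions endpoint. The sketch below only builds the request and does not send it. The endpoint URL and payload shape are assumed from Groq's OpenAI-compatible API, and `build_chat_request` and the `GROQ_API_KEY` placeholder are hypothetical names used for illustration.

```python
import json
import urllib.request

# Groq's OpenAI-compatible chat completions endpoint (assumed; check the Groq API docs).
GROQ_CHAT_URL = "https://api.groq.com/openai/v1/chat/completions"


def build_chat_request(api_key: str, model: str, question: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for the given model ID."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        GROQ_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical placeholder key; replace it with your own before sending.
# The question is "What is the capital of Saudi Arabia?" in Arabic.
req = build_chat_request("GROQ_API_KEY", "allam-2-7b", "ما هي عاصمة السعودية؟")
```

To actually send it, pass `req` to `urllib.request.urlopen` and read `choices[0].message.content` from the JSON reply.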

This is a major update with new blocks and feature additions, such as chat history, system tone and JSON structured output.

Disabled by default; enable it by setting the block to true.


E.g. You are a developer.

UserRequest = the question to ask the AI

customScheme = the JSON structure to generate

For example:

Output
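The extension's internals are not shown in this post, but the three inputs above map naturally onto an OpenAI-style message list. A minimal sketch, assuming the system tone becomes the system message and `customScheme` is appended to it as a formatting instruction; `build_structured_messages` is a hypothetical helper, not the extension's API.

```python
import json


def build_structured_messages(system_tone: str, user_request: str, custom_scheme: dict) -> list:
    """Combine a system tone, a user request and a JSON structure into chat messages."""
    # Ask the model to answer only with JSON shaped like custom_scheme.
    instructions = (
        "Reply only with a JSON object that matches this structure, with no extra text:\n"
        + json.dumps(custom_scheme)
    )
    return [
        {"role": "system", "content": system_tone + "\n" + instructions},
        {"role": "user", "content": user_request},
    ]


messages = build_structured_messages(
    "You are a developer.",
    "Summarize: Groq runs LLMs fast.",
    {"summary": "string", "keywords": ["string"]},
)
```

The resulting `messages` list is what would go into the `messages` field of a chat completion request.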

Existing users, please DM me to get the latest version.

No more battling messy AI text! Say hello to clean, structured data with your own AI-powered features inside App Inventor.

Ever ask an AI something, only to get back a long blob of text that’s impossible to use without hours of parsing?

Wish you could just get exactly the info you need, in a format your app understands — right away?

It’s way simpler than you might think.

By combining the GroqText Extension with a smart prompt strategy — asking the AI to reply in JSON format — you can build reliable, custom AI tools that work seamlessly in your App Inventor apps.

Think of it like creating a lightweight, personal API — powered by Groq — without any server setup or advanced coding skills!

On-Demand AI Features

Instant translations, summaries, keyword detection, emotion analysis — you name it. You can create mini-AI tools for almost any use case.

Consistent, Structured Output

By using JSON responses, you get clean data like { "summary": "...", "keywords": [...] }, ready to use in your blocks with no guesswork.
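In blocks this is a "parse JSON" step followed by dictionary lookups; the same idea in Python looks like the sketch below. The reply string is a made-up example, and `parse_ai_reply` is a hypothetical helper that also rejects replies missing the keys your prompt asked for.

```python
import json


def parse_ai_reply(reply_text: str, required_keys: tuple) -> dict:
    """Parse a model reply as JSON and check that the expected keys are present."""
    data = json.loads(reply_text)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [key for key in required_keys if key not in data]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    return data


reply = '{"summary": "Groq serves LLMs quickly.", "keywords": ["Groq", "LLM"]}'
result = parse_ai_reply(reply, ("summary", "keywords"))
print(result["summary"])      # Groq serves LLMs quickly.
print(result["keywords"][0])  # Groq
```

Checking the keys up front keeps a malformed answer from failing later, deep inside the app logic.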

Tidy, Simple Logic

Forget the spaghetti of text parsing. Your block logic becomes clean and easy to follow.

You Write the “Function” as a Prompt

Just describe what you want and how the response should look.
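One way to picture a "function written as a prompt" is a template with a fixed task and a fixed JSON reply shape, filled in with the caller's arguments. This is a sketch; the translation task, `TRANSLATE_PROMPT` and `make_translate_prompt` are hypothetical examples, not part of the extension.

```python
# A hypothetical "function" defined purely as a prompt: the task text plus the
# JSON shape the reply must follow. Doubled braces are literal braces for str.format.
TRANSLATE_PROMPT = (
    "Translate the text below into {language}. "
    'Reply only with JSON of the form {{"translation": "..."}}.\n\n'
    "Text: {text}"
)


def make_translate_prompt(text: str, language: str) -> str:
    """Fill the prompt template; the result is sent as the user message."""
    return TRANSLATE_PROMPT.format(language=language, text=text)


prompt = make_translate_prompt("Hello", "French")
```

The "arguments" are the template fields and the "return type" is the JSON shape, so the reply can be read with a single lookup of `translation`.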

Built-in Tools for Decoding

Use the built-in JSON parsing block to extract values from the text by field path.
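The exact field-path syntax of the parsing block is not documented in this post. The sketch below assumes dot-separated keys with numeric segments used as list indices; `extract_by_path` is a hypothetical helper that shows how such a path is walked.

```python
import json


def extract_by_path(json_text: str, field_path: str, separator: str = "."):
    """Walk a parsed JSON value one path segment at a time.

    Numeric segments index into lists; other segments are dictionary keys.
    Raises an exception (for example KeyError or IndexError) if the path does not match.
    """
    value = json.loads(json_text)
    for segment in field_path.split(separator):
        if isinstance(value, list):
            value = value[int(segment)]
        else:
            value = value[segment]
    return value


reply = '{"result": {"keywords": ["fast", "cheap"]}}'
print(extract_by_path(reply, "result.keywords.1"))  # cheap
```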

Easy Data Handling

Use a simple "lookup in pairs" block to get the exact value you need from the dictionary — like the summary, translation, or anything else.

ExtractValuefromJSON

No external servers. No complicated parsing. No fuss.

Just visual blocks and the power of GroqText doing exactly what you ask — in a format that fits right into your app.

Ready to level up your projects? Install the GroqText extension, craft a clear JSON-based prompt, and start building smart, AI-enhanced features in minutes — right from App Inventor.

Now process images using AI. GroqVision is now free with GroqText.

Grab both for $6. The offer is also valid for existing users. DM me to get the extension.

The model that has overthrown Gemma, Mistral and Gemini 2.0

Llama 4 Scout, a 17 billion active parameter model with 16 experts, is the best multimodal model in the world in its class and is more powerful than all previous generation Llama models, while fitting in a single NVIDIA H100 GPU. Additionally, Llama 4 Scout offers an industry-leading context window of 10M and delivers better results than Gemma 3, Gemini 2.0 Flash-Lite, and Mistral 3.1 across a broad range of widely reported benchmarks.

Here's the ID: meta-llama/llama-4-scout-17b-16e-instruct

The model that has overthrown GPT-4o, Gemini 2.0 Flash and DeepSeek v3

Llama 4 Maverick, a 17 billion active parameter model with 128 experts, is the best multimodal model in its class, beating GPT-4o and Gemini 2.0 Flash across a broad range of widely reported benchmarks, while achieving comparable results to the new DeepSeek v3 on reasoning and coding—at less than half the active parameters. Llama 4 Maverick offers a best-in-class performance to cost ratio with an experimental chat version scoring ELO of 1417 on LMArena.

Here's the ID: meta-llama/llama-4-maverick-17b-128e-instruct

How can I download this version?

While Large Language Models (LLMs) excel at generating text, Compound-Beta takes the next step. It's an advanced AI system designed to solve problems by taking action and intelligently using external tools, alongside powerful Llama models.

Two systems are available: compound-beta and compound-beta-mini. Both Compound-Beta systems support the following tools:

Note: Custom user-provided tools are not supported at this time.

User: make a query to the spacex api and print its response

AI: To make a query to the SpaceX API and print its response, I will use the requests library in Python to send a GET request to the API endpoint. Based on the search results, I found that the SpaceX API has several endpoints for different types of data. The most relevant endpoint for this task appears to be https://api.spacexdata.com/v4/launches/latest. This endpoint returns information about the latest launch.

Here is the Python code to make a query to the SpaceX API and print its response:

```python
import json

import requests


def get_latest_launch():
    """Fetch the latest launch from the SpaceX v4 API, or return None on failure."""
    url = 'https://api.spacexdata.com/v4/launches/latest'
    try:
        # A timeout keeps the call from hanging forever if the API is unreachable
        response = requests.get(url, timeout=10)
        response.raise_for_status()  # Raise an exception for HTTP errors
        return response.json()
    except requests.RequestException as e:
        print(f"An error occurred: {e}")
        return None


latest_launch = get_latest_launch()
print(json.dumps(latest_launch, indent=4))
```

This code sends a GET request to the https://api.spacexdata.com/v4/launches/latest endpoint and prints the response in a JSON format.

The response from the API includes various details such as the launch date, rocket used, crew members, and more. Here is an example of the response:

```json
{
    "fairings": null,
    "links": {
        "patch": {
            "small": "https://images2.imgbox.com/eb/d8/D1Yywp0w_o.png",
            "large": "https://images2.imgbox.com/33/2e/k6VE4iYl_o.png"
        },
        "reddit": {
            "campaign": null,
            "launch": "https://www.reddit.com/r/spacex/comments/xvm76j/rspacex_crew5_launchcoast_docking_discussion_and/",
            "media": null,
            "recovery": null
        },
        "flickr": {
            "small": [],
            "original": []
        },
        "presskit": null,
        "webcast": "https://youtu.be/5EwW8ZkArL4",
        "youtube_id": "5EwW8ZkArL4",
        "article": null,
        "wikipedia": "https://en.wikipedia.org/wiki/SpaceX_Crew-5"
    },
    "static_fire_date_utc": null,
    "static_fire_date_unix": null,
    "net": false,
    "window": null,
    "rocket": "5e9d0d95eda69973a809d1ec",
    "success": true,
    "failures": [],
    "details": null,
    "crew": [
        "62dd7196202306255024d13c",
        "62dd71c9202306255024d13d",
        "62dd7210202306255024d13e",
        "62dd7253202306255024d13f"
    ],
    "ships": [],
    "capsules": [
        "617c05591bad2c661a6e2909"
    ],
    "payloads": [
        "62dd73ed202306255024d145"
    ],
    "launchpad": "5e9e4502f509094188566f88",
    "flight_number": 187,
    "name": "Crew-5",
    "date_utc": "2022-10-05T16:00:00.000Z",
    "date_unix": 1664985600,
    "date_local": "2022-10-05T12:00:00-04:00",
    "date_precision": "hour",
    "upcoming": false,
    "cores": [
        {
            "core": "633d9da635a71d1d9c66797b",
            "flight": 1,
            "gridfins": true,
            "legs": true,
            "reused": false,
            "landing_attempt": true,
            "landing_success": true,
            "landing_type": "ASDS",
            "landpad": "5e9e3033383ecbb9e534e7cc"
        }
    ],
    "auto_update": true,
    "tbd": false,
    "launch_library_id": "f33d5ece-e825-4cd8-809f-1d4c72a2e0d3",
    "id": "62dd70d5202306255024d139"
}
```

This response provides information about the latest launch, including the launch date, rocket used, crew members, and more.
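Once `get_latest_launch()` returns the parsed dictionary, individual fields can be read directly instead of printing the whole object. A small sketch using a trimmed copy of the example response above; `summarize_launch` is a hypothetical helper and relies only on field names that appear in that response.

```python
def summarize_launch(launch: dict) -> dict:
    """Pick a few fields out of a SpaceX v4 launch object."""
    cores = launch.get("cores", [])
    return {
        "name": launch.get("name"),
        "date_utc": launch.get("date_utc"),
        "success": launch.get("success"),
        "crew_count": len(launch.get("crew", [])),
        # True only if every core reports a successful landing; None when no cores are listed
        "landings_ok": all(core.get("landing_success") for core in cores) if cores else None,
    }


# Trimmed copy of the example response (only the fields used above).
sample = {
    "name": "Crew-5",
    "date_utc": "2022-10-05T16:00:00.000Z",
    "success": True,
    "crew": [
        "62dd7196202306255024d13c",
        "62dd71c9202306255024d13d",
        "62dd7210202306255024d13e",
        "62dd7253202306255024d13f",
    ],
    "cores": [{"core": "633d9da635a71d1d9c66797b", "landing_success": True}],
}
summary = summarize_launch(sample)
```

Using `.get()` keeps the helper from crashing when a field is missing from an older or upcoming launch.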

This is the power of Groq Compound systems: they can search the web and execute code!

The extension now supports 30+ LLMs, including agentic LLMs with search and tool calling (such as code execution).

This is a major update with lots of new blocks and improvements.

The extension now supports Vision models. You will be able to ask questions about images.
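For vision models, the question and the image travel together in one user message. A minimal sketch, assuming Groq's OpenAI-compatible format where the image is embedded as a base64 data URL (check Groq's vision docs for size limits and which models accept images); `build_vision_message` is hypothetical, and the message would go in the `messages` list of a request to a multimodal model such as meta-llama/llama-4-scout-17b-16e-instruct.

```python
import base64


def build_vision_message(question: str, image_bytes: bytes, mime_type: str = "image/jpeg") -> dict:
    """Build one OpenAI-style user message that pairs a question with an inline image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
        ],
    }


# Placeholder bytes stand in for a real image file read with open(path, "rb").read().
message = build_vision_message("What is in this picture?", b"\x89PNG placeholder", "image/png")
```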

The documentation at the top of the thread has been updated.

If you have already purchased the extension, you will receive a mail with the new extension soon.

Keep Building

Sarthak Gupta

Can you send the AIA?

Sharing paid versions of extensions is strictly not allowed; see the extension terms. It's piracy even inside an AIA.

@TechInterest if you want to share the AIA with @Prem_Gupta share it with the extension removed.

PS: It's fine if you are using GroqTextMini (free version)

I wrote an in-depth guide about the Groq API and the benefits you get on the free plan.

I never sent anything.

Brother, @TechInterest made a project above with the free AIX, which is why I asked for the AIA. If possible, please send the AIA, @TechInterest.