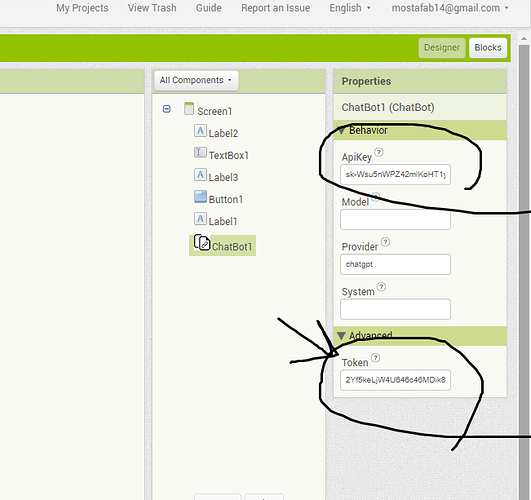

This screenshot is from an API test with the ChatBot component.

No. The server will always prefer to use the ApiKey property, if set.

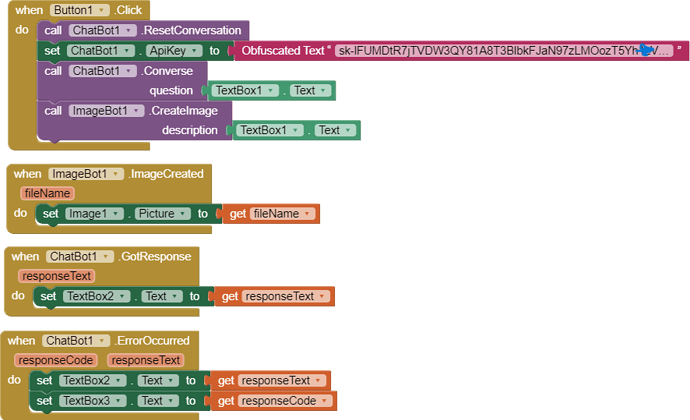

I am using my own API key. The program worked until yesterday, but it has not worked since. I have tried several times.

I am attaching two .aia test files (with my own API key): one uses the ChatBot component and one uses the OpenAI extension. Please look at both. The ChatBot doesn't work, but the OpenAI extension does.

Please help me

chatbottest.aia (2.3 KB)

chatopenai.aia (133.5 KB)

Hi, I'm facing the same issue: I get Error 4200 stating that I've exceeded the quota. I'm using my own API key from OpenAI, and the app I've built had been working perfectly fine until last evening, when I started getting this message. My OpenAI API key works perfectly fine in other systems and apps. Any advice is greatly appreciated.

Are you in a country that has banned AI? See "Chatbot error 404 (ChatGPT is unavailable in some countries)" - #4 by SteveJG. Your location might be part of the issue.

Or see "The (404 Usage Over Quota) error of ChatBot Component" - #4 by SteveJG.

Or you might not have actually paid your bill.

I also encountered this problem

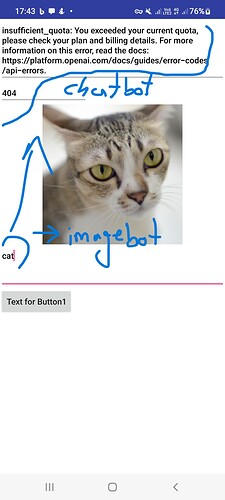

I use my own API key. The ImageBot works, but the ChatBot does not.

No, I use ChatGPT.

Also, the ImageBot is working.

The ChatBot has not been working for two days now, even with my own API key.

Have you used the ChatBot recently?

Yes, using the MIT defaults. It worked earlier in the month, but as MIT indicates, the free quota that MIT supports was used up earlier in the month, which resulted in the 'exceeded your current quota' message. The ChatBot component works perfectly well with the defaults until the shared quota is exhausted.

I use my own API key, so why should the ChatBot stop working for me?

This page (ChatBot) indicates that what you are doing should work.

I asked the MIT technical staff to tell us what they think. They might respond on Monday, since Sunday is their day off.

thank you

TL;DR

Try it again, it should now work!

Read on if you are interested in the details...

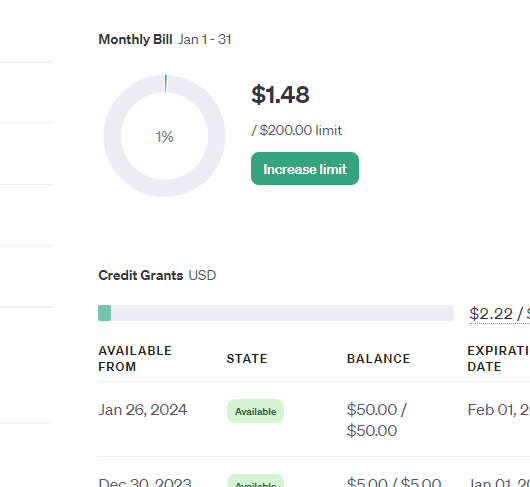

So MIT's account was over quota. Normally, you would expect that this should not have an impact on people using their own API keys. However, we call ChatGPT's "moderation" API on all requests, using our own API key, even if someone provides their own API key. We didn't see the need to use someone else's as the moderation API doesn't cost anything to use.

However, it turns out that OpenAI shuts off the use of the moderation API when your (or in this case, our) account is over quota. So because MIT's account was over quota, no usage of ChatGPT, even when someone provides their own API key, will work!
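To make the failure mode concrete, here is a minimal sketch of the flow described above. It is a toy simulation, not MIT's proxy code: the names `Account`, `moderate`, `chat`, and `proxy_handle` are hypothetical, no real OpenAI call is made, and the "forbidden" keyword rule merely stands in for the moderation check.

```python
from dataclasses import dataclass
from typing import Optional


class QuotaExceeded(Exception):
    """Raised when the account behind an API key is over quota."""


@dataclass
class Account:
    """Hypothetical stand-in for the account behind an API key."""
    name: str
    over_quota: bool = False


def moderate(account: Account, prompt: str) -> bool:
    """Toy moderation step: returns True if the prompt is flagged."""
    if account.over_quota:
        # Assumption taken from the thread: moderation is refused
        # when the calling account is over quota.
        raise QuotaExceeded(f"{account.name} is over quota")
    return "forbidden" in prompt.lower()  # illustrative rule only


def chat(account: Account, prompt: str) -> str:
    """Toy chat step, billed to whichever account is passed in."""
    if account.over_quota:
        raise QuotaExceeded(f"{account.name} is over quota")
    return f"reply to: {prompt}"


def proxy_handle(prompt: str, proxy_account: Account,
                 user_account: Optional[Account] = None) -> str:
    # Moderation always runs on the proxy operator's own key.
    if moderate(proxy_account, prompt):
        return "prompt rejected by moderation"
    # The actual query prefers the user's key when one is supplied.
    return chat(user_account or proxy_account, prompt)
```

In this sketch, calling `proxy_handle("hi", Account("proxy", over_quota=True), Account("user"))` raises `QuotaExceeded` even though the user's account is fine, because the moderation step runs first and depends on the proxy's account.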

We added more money to our account, so we are now no longer over quota.

A few questions:

1- Is my API key not being used?

2- As a developer, can I publish my program with my own API key or not?

3- Should I worry about future interruptions?

- Your API key is being used for the actual queries to ChatGPT. Our key is used for the moderation API, which we call before making the actual call to ChatGPT. The moderation API flags inappropriate prompts.

- Yes, you can publish with your own API key.

- There are no guarantees in life. The ChatBot component directs queries to a proxy that we (MIT) operate. This permits us to add new providers and models without having to release new versions of MIT App Inventor. We work diligently to keep this proxy infrastructure running, but it is offered without warranty.

Thanks

Please provide conditions for those using your API that the chatbot does not disconnect

I'm not sure what you are asking here.
